A platform for building AI, ML, & Computer Vision pipelines using real-time sensing data

INFINIWORKFLOW runs in a browser with the following main UI components

The application menu allows the following functionality

The tool catalog allows you to add new tools as nodes into your flowgraph

The first tab will show all the tools and the remaining tabs show a subset of tools such as related to computer vision or ML etc. You can hover over the tab icon and a tooltip will show you the category name. Once a category tab is selected you can further refine the list of tools shown by entering keywords input, this is useful to quickly find a particular tool you want to insert into your workflow.

Hovering over the tools shows a tooltip description of the tool. To insert a tool into the workflow can be done with the following gestures:

Drag and Drop

Insert a node with edge automatically can be done by selecting the node you wish to connect it to and then double clicking the tool - a new node will be inserted and a link will automatically be added as well.

Infiniworkflow has several hundred nodes avaialble, which allows for many possibilities but also can be daunting for new users or can stand in the way of users who are only looking to build a specific application (if you are building a Data Science workflow, you probably don't need to see the many Color Correction nodes that are available). As such, Layouts have been added as a feature for controlling which categories and nodes show up in your Tool Catalog. They are straightforward to use, but by no means necessary to learn about if you do not wish to change how your Tool Catalog appears, so you may skip this section and not lose any critical information.

Layout can be defined as a set of categories, and the respective nodes inside them, that will appear within the Tool Bar if the Layout is selected. You can switch between Layouts by clicking on the Layouts button, found in the bottom right corner of the screen (see video below). By default the Layout is "All", as that shows all categories and all nodes inside those categories. However, this is but 1 of a handful of pre-made Layouts that are available. These pre-made Layouts include "Computer Vision", "Data Science", and "Machine Learning"; as can be expected, when we switch to one of those Layouts, only the categories / nodes relevant to the respective topic (Computer Vision, for example) will appear. The video below showcases how the toolbar changes when switching between Layouts.

In addition to the Layouts seen here, users can also choose to add their own custom Layouts. To add a Layout, go to Settings, which can be found in the bottom right side by the Layouts button, and click on the "New Layouts" option within Settings. Enter in your Layout name, and save. If you click on the Layouts button now, you will now see that you new Layout has been added to the list of other Layouts. These steps are showcased below.

To begin changing how the categories / nodes appear within your Layout's Tool Catalog, click on the Layouts button and then click on the Layout you wish to change. Now that you're in, you may begin moving both categories and nodes as you like. The following operations are possible:

I. Moving single Nodes into the Trash (these nodes will no longer show up in the category they were in previously):

II. Moving an entire Category to the Trash (the entire category will no longer be in view, and all nodes within the category will go to the Trash):

III. Moving single Nodes into different Categories (the Node's symbol will be unchanged, but the Node itself will now belong to the new category):

IV. Moving an entire Category into another Category (the second Category will be the only one to appear in the Categories list on the top of the Tools Catalog, but all nodes within both categories can now be found in this Category):

If you are unhappy with your Layout and want to start over, simply go to the Settings button and click on the "Reset Layout" button. Alternatively, you may delete the Layout all together by clicking on the "Delete Layout" button within Settings. NOTE: Make sure that you are IN the Layout that you want to reset / delete, or you may end up resetting / deleting the wrong Layout! Do this by clicking on the desired Layout after clicking the Layouts button before making any changes.

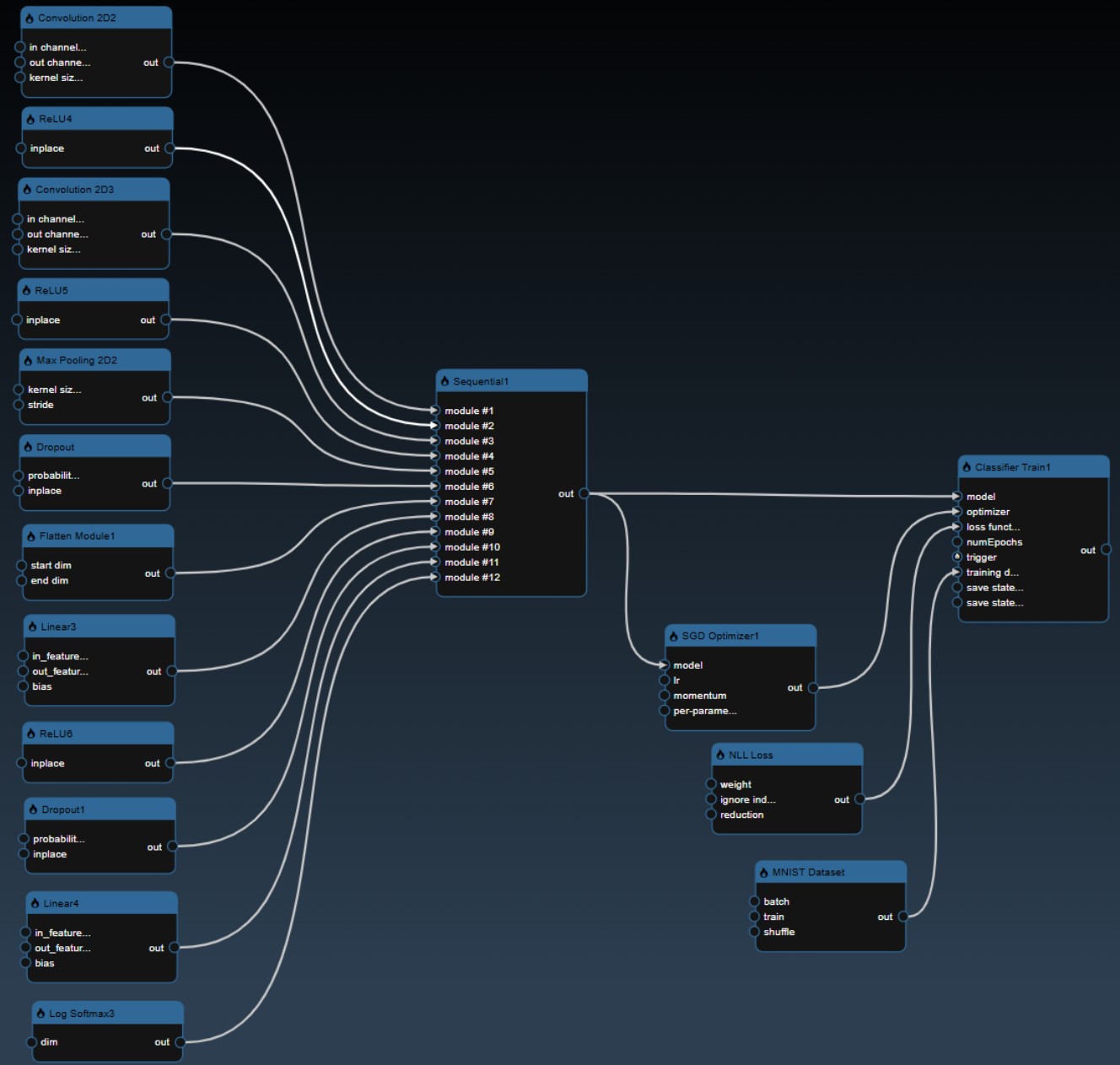

The flowgraph is used to construct your workflow that comprises of Nodes and Edges. Nodes represents functions that have input and generate outputs. These nodes are created by dragging tools into your workflow from the Tools Catalog. A node's input and output have 'ports' which are where edges can be connected. Edges are connections between the output port of an upstream node to the input port of a upstream node. Any inputs ports that are unconnected can also be set to specific values using the Parameter Editor. The color of the node indicates the following:

| C++ Nodes (can be executed on GPU or CPU) | |

| Python Nodes (can be executed on GPU or CPU) | |

| Cuda Kernels (always executed on the GPU) | |

| Widget nodes (executed on the CPU) |

The flowgraph has the following components:

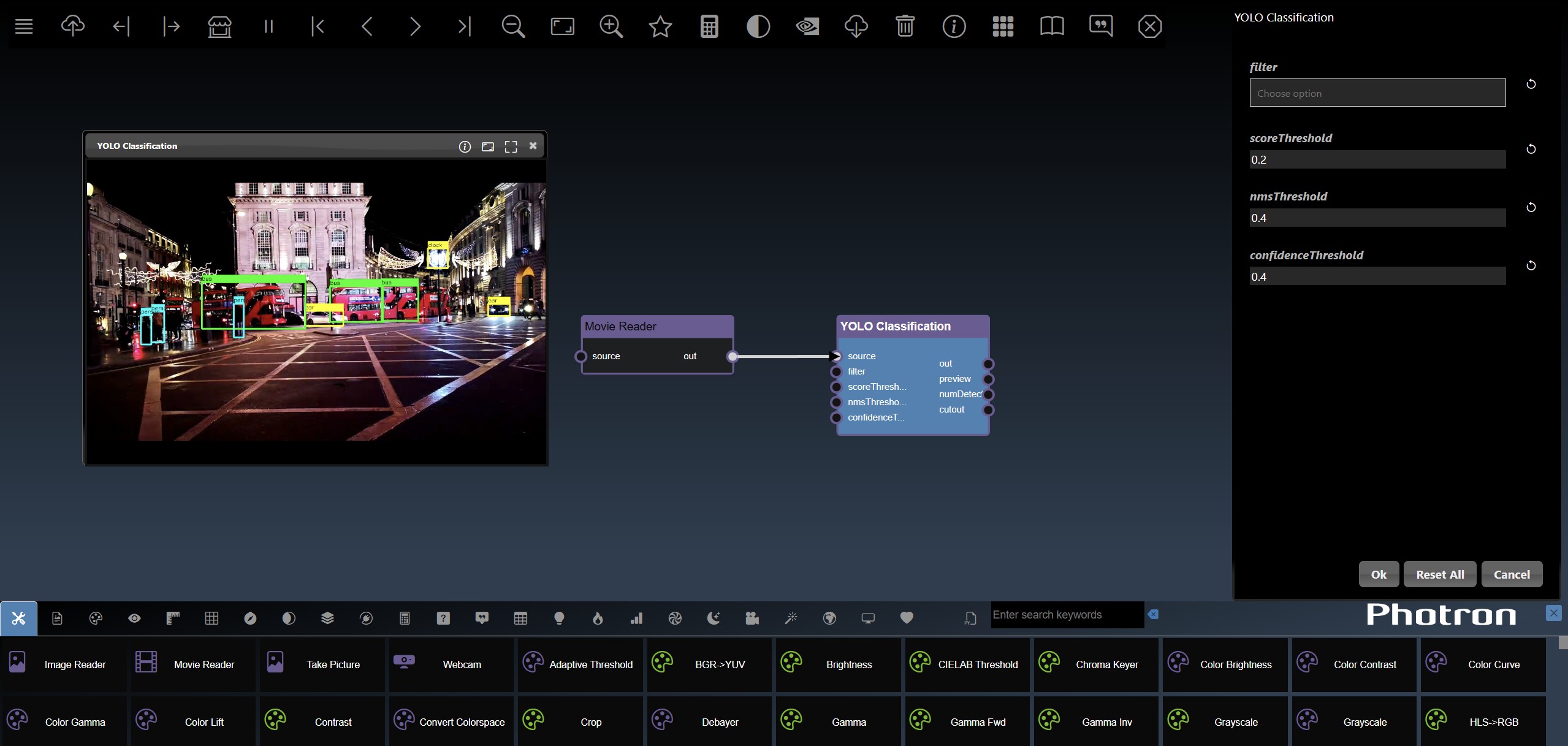

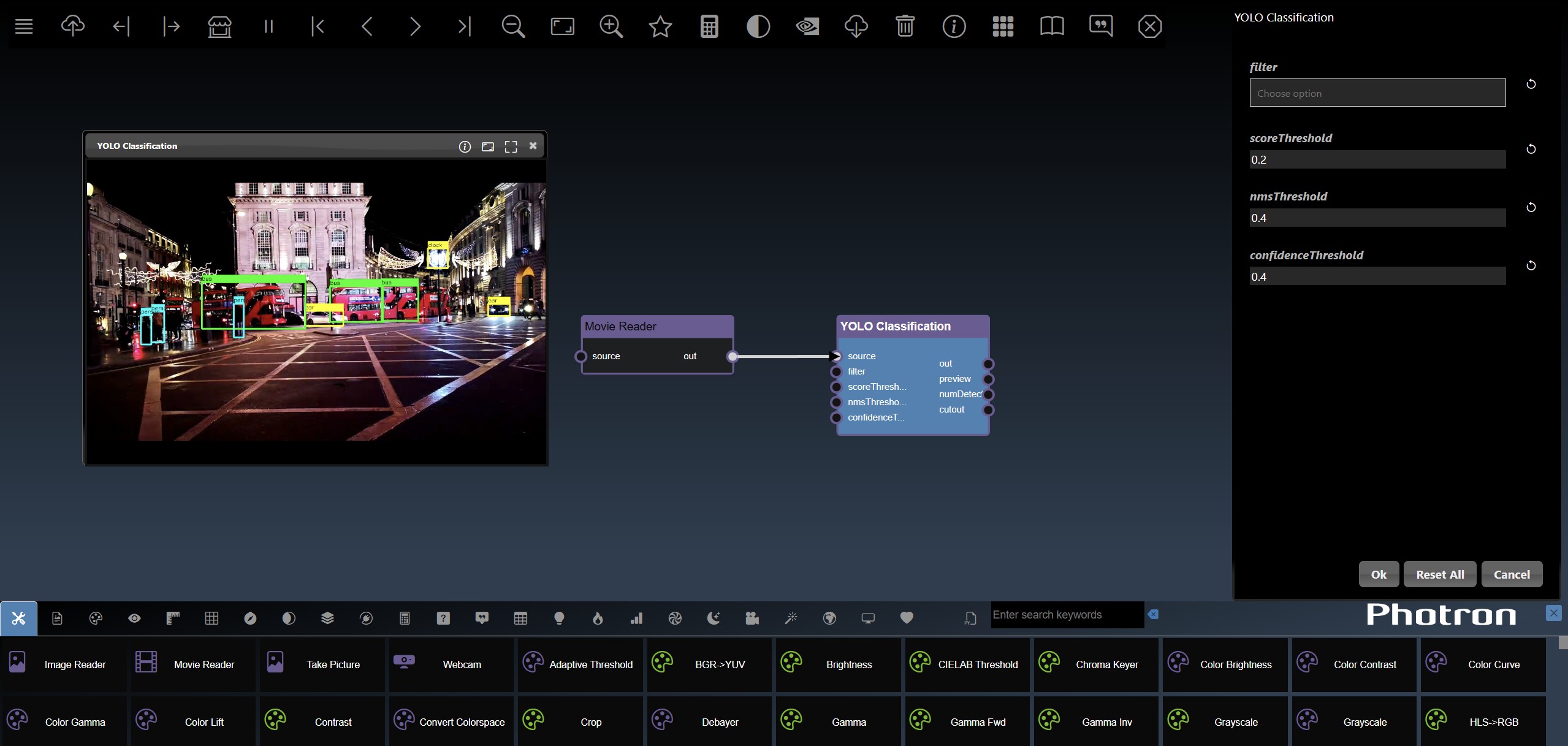

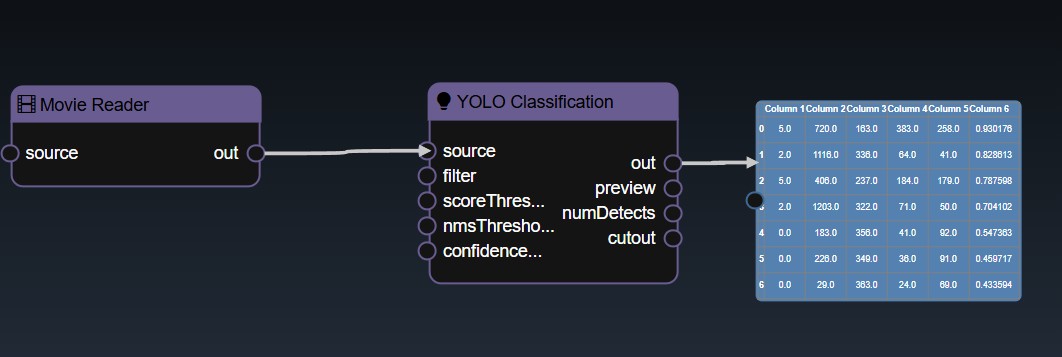

Adding an Edge to connect the output of an upstream node to the input of a downstream node. In this example, we want to have the Yolo Classification be done on a Movie Reader, we thus connect the output of the Movie Reader node to the input of the Yolo Classification node. Click on the source port of the upstream node and the drag to the destination port of the downstream node. A green line color indicates that the edge is allowed which is based on the type matching between the two ports

If the types do not match then a red line color indicates that the edge is invalid

There are a few exceptions to allow different types to be connected to each other. For example, the image2D type, which represents a 2D image in system memory, can be connected to a type cuda2D, a 2D image in GPU memory and vice-versa. The exceptions are as follows:

| Output Type | Input Type |

|---|---|

| * | Any type |

| Any type | * |

| image2D | cuda2D |

| cuda2D | image2D |

| double, int, bool or numeric | double, int, bool or numeric |

| double, int, bool or numeric | double, int, bool or numeric |

| numeric2 | double2, int2 |

| double2, int2 | numeric2 |

| numeric3 | double3, int3 |

| double3, int3 | numeric3 |

| torch.nn.Module | torchvision.model |

| torchvision.model | torch.nn.Module |

Removing an Edge disconnects the output of upstream node to the input of a downstream node. In this example we no longer want the Yolo Classification be done on the output of the Movie Reader. Hover over the edge, it will indicate it can be deleted when a change in cursor happens, then click on the edge to delete it

To see the type of a node's input or output, hover over the port and it will show as a tooltip

Select a node can be done with a single click on the node. The node is shown highlighted in blue when it is selected

Clicking and dragging on a node will select it and also allow you to move the node around in the flowgraph

Holding the shift key whilst clicking allows you to add more nodes to the selection

To deselect all the nodes you can click on the flowgraph

Multiple nodes can also be selected by doing a rectangular selection, hold the alt key and drag the mouse which shows a box selection which will select all the nodes in the rectangle intersection after the mouse is released

To delete a node can be done by clicking the delete key

To 'View & Edit' a node, double click the node, if there are multiple outputs a menu will allow you to select which output you wish to view

To View a specific output you can double click on the output port of the node

You can also 'rip' a node to remove it from the edges by shaking the node quickly

To zoom into the center of the flowgraph you can press the + hotkey

To zoom out of the flowgraph you can press the - hotkey

To zoom fit flowgraph, showing all nodes in viewport, you can press the f hotkey

If you wish to view a specific output of a node, you can double click the output port directly and avoid needing to select from the menu

Click on the trigger icon for input ports to trigger the action

Mandatory inputs will be show in a rectangular border whereas optional inputs are drawn in a circular border:

To pan the flowgraph you can click and then drag the mouse. This allows you to navigate the workflow in the flowgraph when it becomes more complex

You can also zoom in and out using mouse scroll wheel or zoom gesture

When nodes are not in the visible viewport then indicators are shown on the boundary of the viewport. The indicators are useful to highlight scrolling or zooming will yield hidden nodes.

Clicking the left mouse button over the node brings up the node context menu and also selects the node

Inspect and adjust functions

Node attribute functions

Performance related functions

Input/output port related functions

ML functions are available when ML nodes are selected

Clipboard functions

Experimental functions

When you bring up the context menu without a node selected, the flowgraphs viewport functions are shown:

The Parameter Editor allows you to edit the parameters of the currently edited node

The UI consists of the tool icon and the name of the node that is being editoed, followed by the list of input parameters of the edited node and finally the dialog buttons. The description link, which shows the name of the edited node, when clicked will open a webpage that has the description of the tool. The input parameters are shown for any inputs that are not connected via the flowgraph.

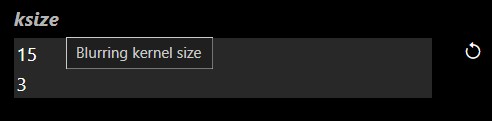

Hovering over the parameter will show the description of the parameter:

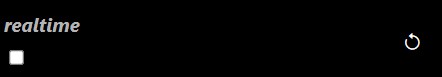

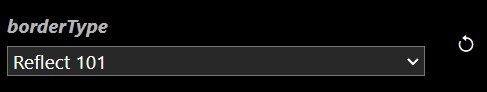

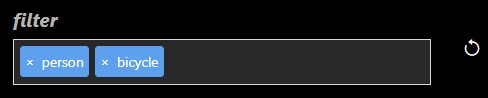

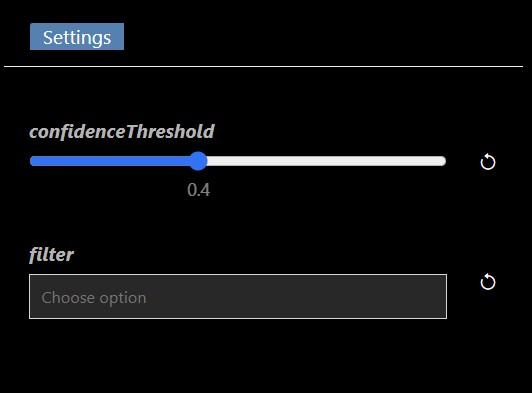

The dialog buttons allow you to close the dialog - either you can accept the changes made by clicking OK, or reject any changes made to the parameters by clicking Cancel. A button also allows you to Reset All the parameters to the original default tool settings. The UI for each parameter input will be based on the type of the input, but all of them will have a reset icon that allows you to reset that particular parameter input back to its default value. The different types of parameter UI controls are as follows

| UI Look | Example | Description |

|---|---|---|

| Numeric textfield |  |

A numeric input allows you to enter a value. There are also step controls that allow you to increment one unit up and down. Below the numeric input you can drag, a range UI will appear. Whilst dragging, holding the shift key will do smaller step changes whilst holding control key will do larger step changes. |

| Numeric2 textfield |  |

Two numeric inputs allow you to enter both numerical values. Below the numeric input you can drag, a range UI will appear. Whilst dragging, holding the shift key will do smaller step changes whilst holding control key will do larger step changes. |

| Numeric slider |  |

If the slider has minimum and maximum values a slider appears Whilst dragging, holding the shift key will do smaller step changes whilst holding control key will do larger step changes. |

| Numeric2 slider |  |

Two numeric inputs with sliders that can optionally be locked together to modify both values at the same time. Whilst dragging, holding the shift key will do smaller step changes whilst holding control key will do larger step changes. |

| Checkbox |  |

A checkbox toggle to allow you to set values of true or false |

| Selection menu |  |

A selection menu allows you to set the value to one of the predefined values from a permitted set of values in the selection menu |

| Multi selection menu |  |

Multi selection allows you to add tags of permitted values. Click on the widget and a list of permitted values will show up. In some cases youur own user defined values that are not in the permitted set of values |

| Textfield |  |

A multi line textfield to allow you to enter the value for string parameters |

| Point |  |

A point allows you to set the values with a textfield and also has an icon that when clicked opens the viewer and you can select a point by clicking in the image directly |

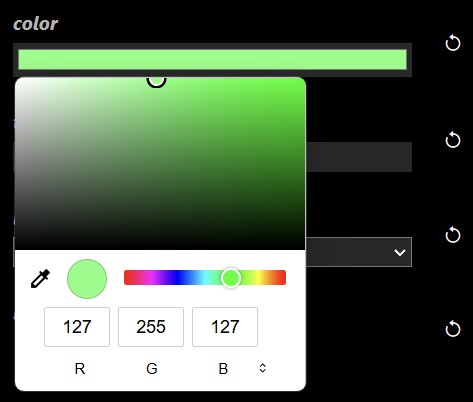

| Color |  |

A color button when clicked allows you to set the color using a color dialog

|

| Curve |  |

An icon when pressed opens the bezier curve editor

|

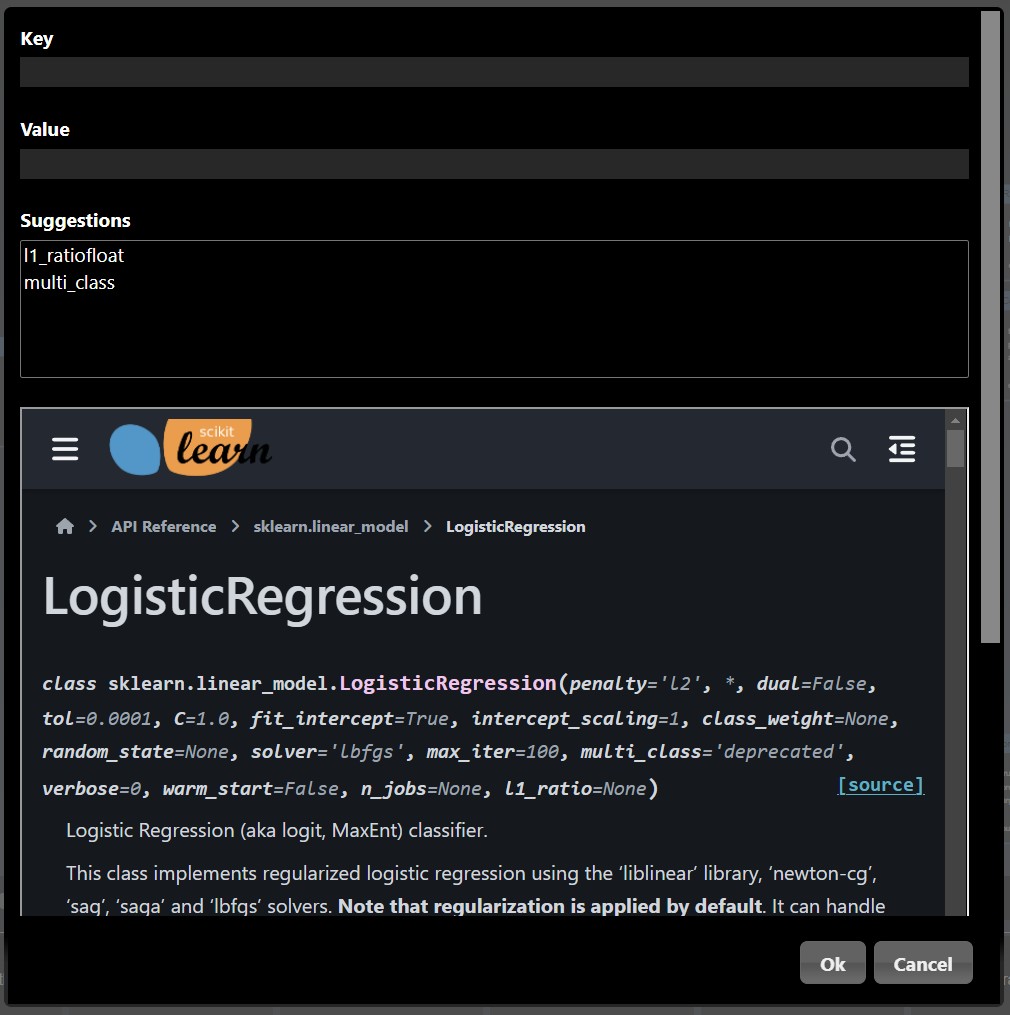

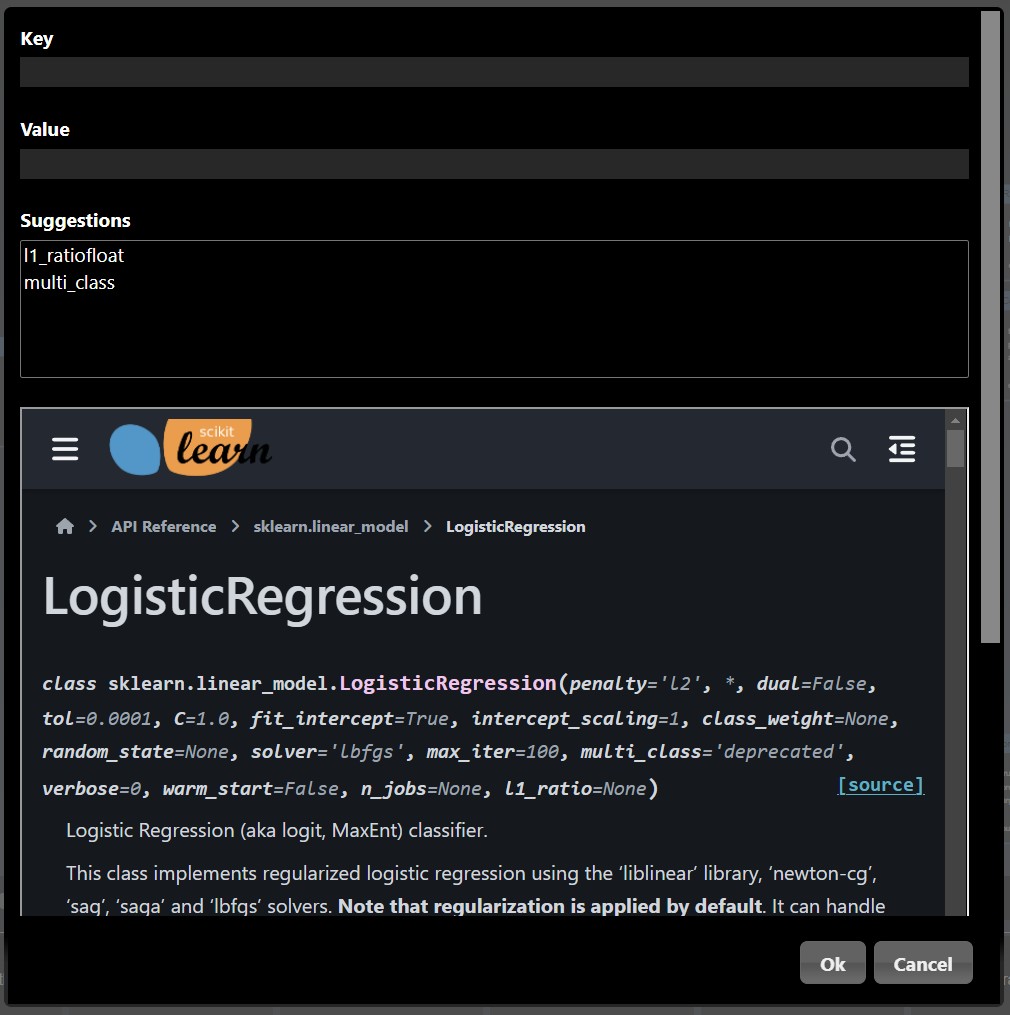

| Map |  |

A map is a series of key/value pairs.

Clicking on the widget opens a dialog editor that shows documentation as well as

allow you to enter the key abd value pair

|

| Filebrowser |  |

An icon when pressed opens the IO Dialog that allows you to set the file location. The prefix ${assets} is used to specify the file location is in the assets folder. |

| Tabs |  |

Some of the tools also have a Tab user-interface to layout the controls into different tabs. |

The viewer allows you to view the outputs of the currently viewed node

A node can be viewed using the node context menu and selecting 'View' or 'View & Edit'. When viewing a node with multiple outputs a menu will ask which output to view (alternatively, if you wish to view a specific output of a node, you can double click the output port directly and avoid needing to select from the menu)

The viewer also has controls to zoom and pan. Using the mouse scroll wheel or zoom gesture you can also then pan by dragging the image. When zoomed in, a thumbnail is shown of the full image together with a slider to set the zoom amount.

Point parameters in the Parameter Editor can be set in the viewer. Select the overlay icon and then click in the viewer to set the location of the point:

Only one node can be viewed at one time in the viewer. However, the flowgraph can also show multiple the output of multiple nodes at the same time. For example, using the 'Thumbnail Image Display' or 'Full Image Display' tool allows you to show images that are drawn in the flowgraph directly. Both the 'Thumbnail Image Display' or 'Full Image Display' allow you to maximize or minimize the image view by hovering over the right hand corner and clicking the icon. Additional Display Nodes are available that can be used to view different types of outputs directly on the flowgraph.

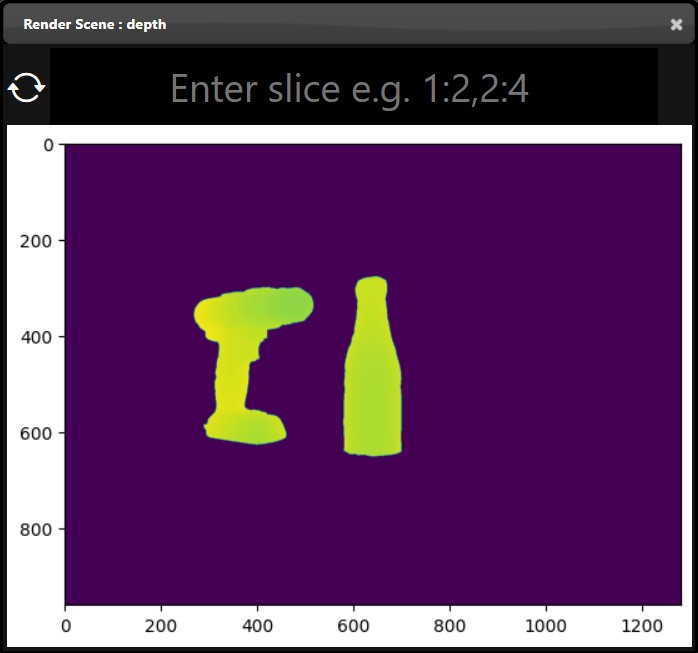

The viewer, on top of displaying images, has specific UI to display mutli-dimensional data:

Dataframes represent 2D tables and are implemented using the Pandas Python module. The viewer displays the DataFrame as a HTML table. Additional controls allow you to slice a set of the rows and columns, in the example below we slice rows [30-40). The icon allows different views of the table including showing the sliced rows and columns, with red cells represent missing data, a summary description of the statistics of each column, a line chart of the numerical columns and the description of the types of each column:

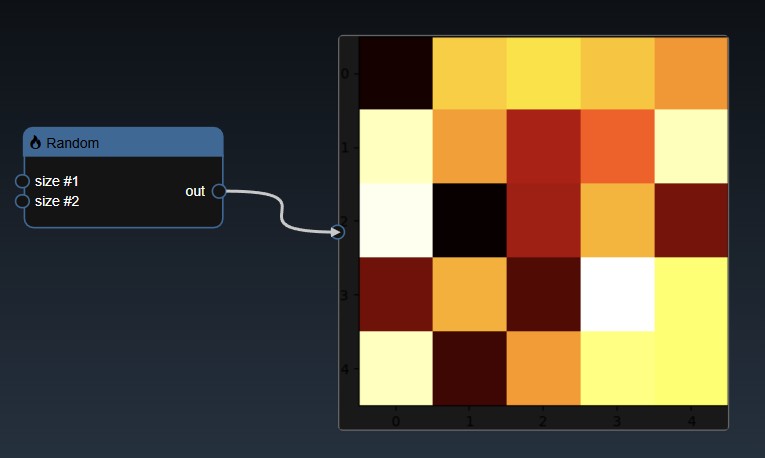

Numpy represents multidimensional numerical arrays and are implemented using the Numpy Python module. You can set a matrix using the Set Matrix tool. The viewer can display Numpy arrays in a variety of different visualizations where it will select the most useful visualization first and by clicking the allows you to visualize other representations of the arrays. Slicing controls are also available to reduce the tensor to a subset of its numerical data.

A 1D dimensional array can be randomly generated or set using the Set Matrix tool, with numbers separated by spaces, commas, semicolons, or tabs. It can be viewed as a histogram; a dot plot; a line chart and a histogram chart:

A 2D dimensional array can be randomly generated or set using the Set Matrix tool, with rows of numbers each separated by spaces, commas, semicolons, or tabs. It can be viewed as a heatmap; a 3D height map plot; a line chart and a table:

A 2D dimensional array can be randomly generated or set using the Set Matrix tool, with entries separated by commas where each entry can be a number or a list denoted by []. A 3D dimensional tensor can be viewed as a 3D plot and as a list of lists:

Tensors represent multi-dimensional numerical arrays and are implemented using the PyTorch Python module. The viewer can display tensors in a variety of different visualizations where it will select the most useful visualization first and by clicking the allows you to visualize other representations of the tensor. Slicing controls are also available to reduce the tensor to a subset of its numerical data.

A 1D dimensional tensor can be viewed as a histogram; a dot plot; a line chart and a histogram chart:

A 2D dimensional tensor can be viewed as a heatmap; a 3D height map plot; a line chart and a table:

A 3D dimensional tensor can be viewed as a 3D plot and abbreviated tensor list:

Images can also be converted to tensors (using the 'Image to Tensor' tool) and they will be viewed as a 3D image; a 3D height map plot; a 3D color space plot, and abbreviated tensor list:

Beyond displaying images and matrices, INFINIWORKFLOW has specific UI to display one-dimensional audio data:

Audio is represented by a list of numerical intensity values over time and they are implemented using the Numpy Python module. You can get audio using the Read Audio tool for audio files or the Input Audio tool for streaming input through the microphone. The viewers in Audio nodes can display audio arrays in two different ways: a waveform and a spectrogram. It will default to the waveform visualization first, which shows the loudness of the sound at every sample over time. By clicking the , it allows you to cycle through visualizations. The other visualization is a spectrogram, which is a colormap of frequencies over time where colors represent the volume of each frequency in decibels (dB).

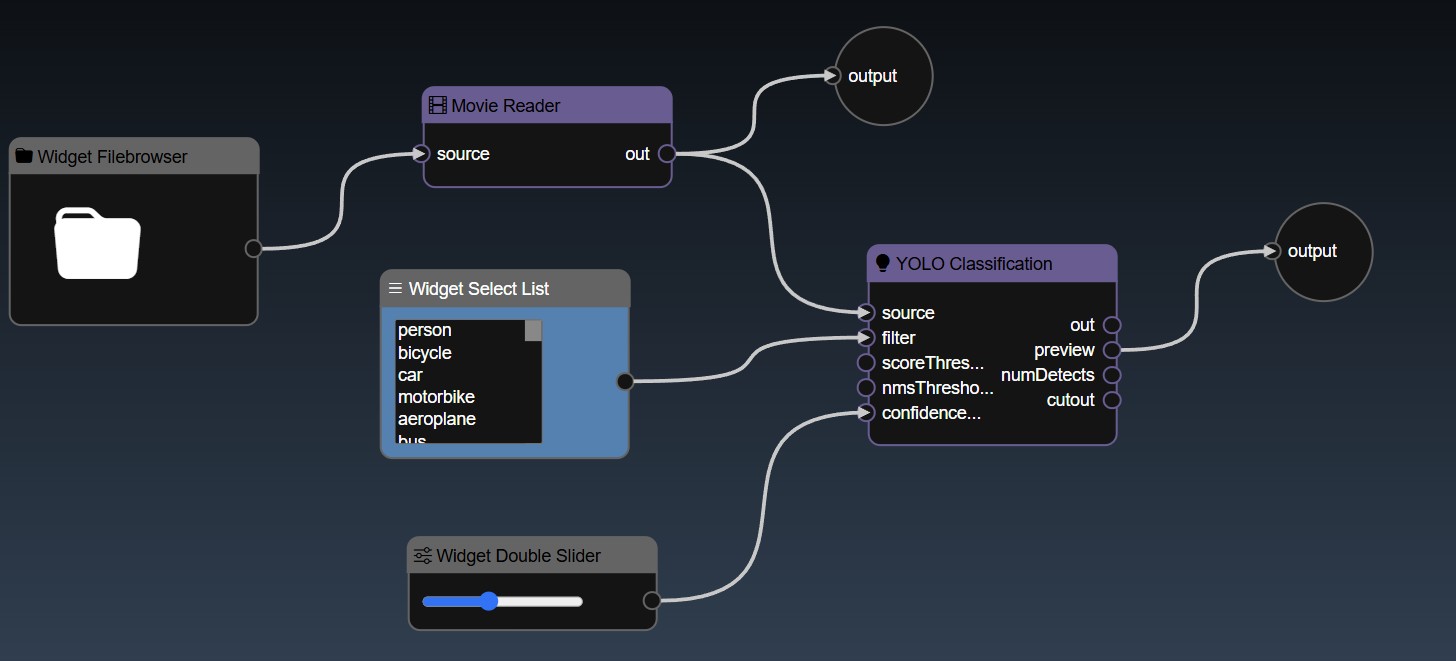

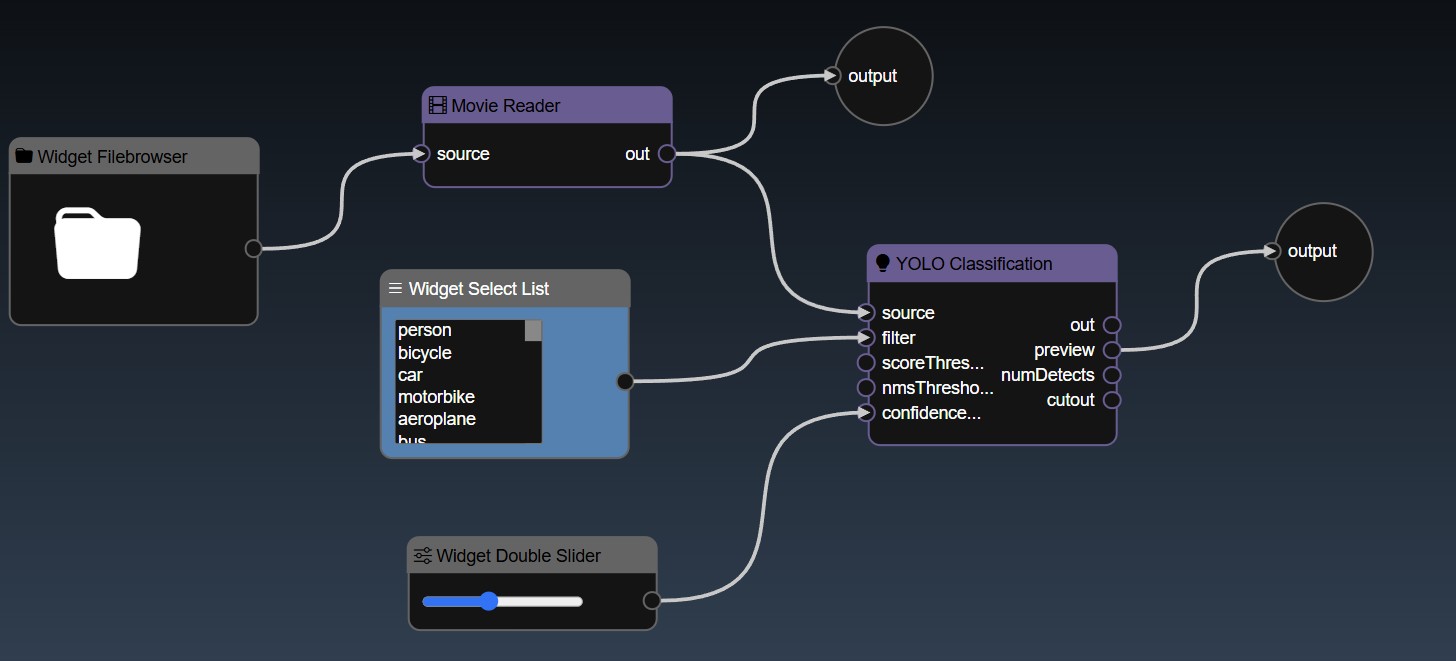

A set of tools, called Widgets, are available that provide user interface controls directly in the flowgraph

These widgets are an easy way to modify the parameters without having to open the Parameter Editor - you can selectively decide which parameters are important enough to add as widgets to the flowgraph. For example, the following flowgraph has a number of widgets added: a "Filebrowser Widget", a "Selection List Widget" and a "Slider Widget" are added to the flowgraph as well as two "Output Widgets":

You can now modify those controls directly in the flowgraph. Furthermore, the widgets can be used in conjunction with the 'Publish' feature. You can refine how the widget will be shown in the Publish view by setting the Widgets parameters - edit the Widget in the Parameter Editor and you can set the widget attributes. Widget attributes include the name which will show in the published view for each widget. Widgets such as Sliders allow you to set their specific attributes such as the minimum, maximum and step value for the Slider widget. All widgets have the common attributes of the name and description (used for tooltips) as well as layouts. The layouts allow you to specify an optional Tab that the widget will be placed in and also the order in which the control will be ordered in the UI (a lower order will allow the control at the top of the layout). An example of the Widget Slider's parameters are as follows:

See the reference section for the full list of Widget Tools

See the section on 'publishing' to understand how you can leverage widgets in published workflows.

A set of tools, called Displays, are available that provide viewing displays directly in the flowgraph This allows you to constantly monitor the output of multiple nodes and avoid switching back and for using the Viewer. For example, the "Thumbnail Image Display" tool shows the results of a the image output of a node:

If you instead want to visualize the full size image rather than the thumbnail, you can use the 'Full Image Display' tool. This shows the image at the actual resolution in pixels in thr flowgraph:

You can also display a matrix (matrix2D) output using the 'Matrix2D Display' tool that shows the results in the form of a table:

DataFrames can also be displayed as tables on the flowgraph using the 'DataFrame Display'. The first few rows of the table are shown, and double clicking the display node will show the other visualizations (such as the statistics and datatypes views):

Tensors can be displayed using the 'Tensor Display'. Double clicking the node will show the other visualizations for the tensor:

Additionally, displays are available for all the other types such as integers, doubles, booleans etc. These displays are useful to get realtime visualization of the various node outputs in your flowgraph:

See the reference section for the full list of Display Tools

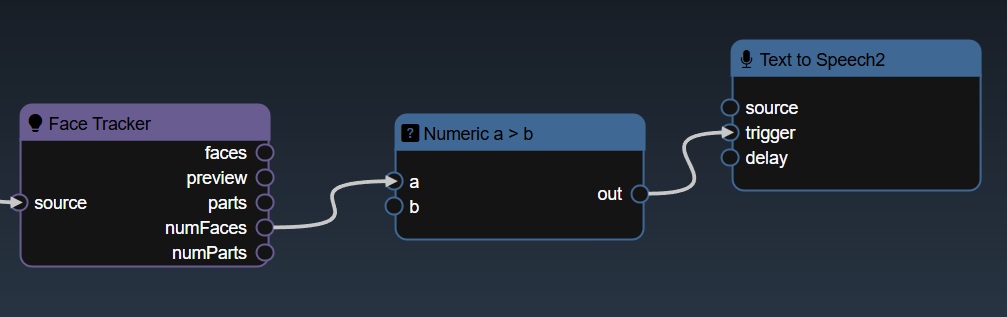

You can create triggers to activate certain nodes that require the trigger to start execution. Typically, you can use the various boolean expression - for example, in the workflow below, the number of detected faces is applied to a "Numeric a>b" tool, this will yield a true value whenever the number of faces is greater than a certain amount. The output of this node is a "trigger" that is used to execute the "Text to Speech" node.

As well as creating triggers automatically based on your outputs of the nodes on your flowgraph, you can also create manual triggers. The Widgets include a "Widget Bool Trigger" and a "Widget Int Trigger". A bool trigger creates a "binary pulse", whereas an int trigger generates a staircase function. Both are useful to manually trigger a node or use one trigger to manually trigger multiple nodes.

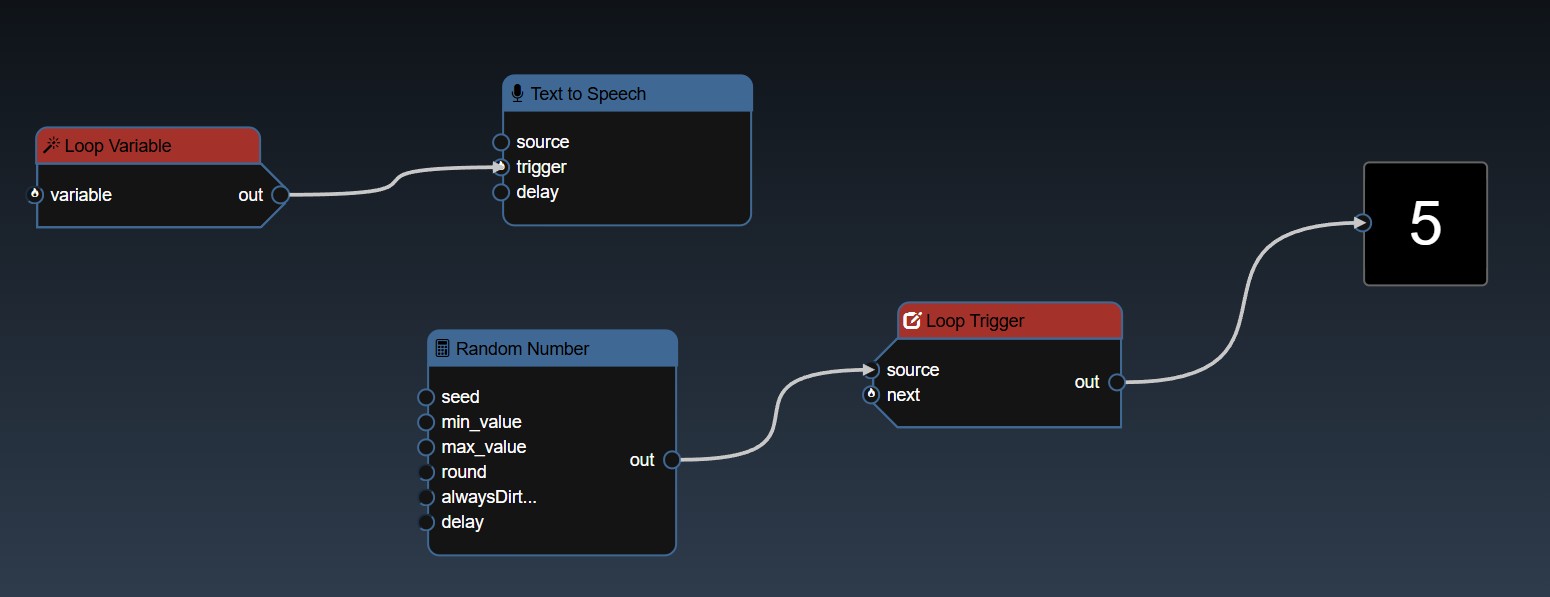

Loop Triggers is allows you to update a Trigger Variable based on when downstream Python nodes have executed and trigger an upstream Python node. This allows you to do "for" loops as the workflow graph is acyclic - meaning no edges can connect a downstream node to an upstream node, so loops are not allowed. However, with this feature you can make a trigger happen upstream when a downstream node is executed. You can add two new nodes, 'Loop Variable' and 'Loop Trigger':

The Loop Trigger when the source has changed (or you click the next trigegr), will use the referenced Loop Variable and will trigger the output of the Loop Variable. The Loop Variable can be placed upstream and flow back to the Loop Trigger, and thus this forms a loop cycle. You can use Loop triggers to perform simulations which may require multiple passes of the workflow nodes.

Infinicam is a high-speed streaming camera capable of capturing and transferring 1.2-megapixel of image data to PC memory at 1,000fps via USB 3.1. Infiniworkflow, on top of all of its many other functionalities, is designed to be a platform for using Infinicam(s) and saving Infinicam footage. There are certain differences between nodes related to Infinicam and most other nodes, so if you will be using an Infinicam, reading through this section is the fastest way to understand everything Infiniworkflow can do with your Infinicam.

The following section will be broken into 3 parts: the Infinicam viewer node and the Infinicam saving nodes.

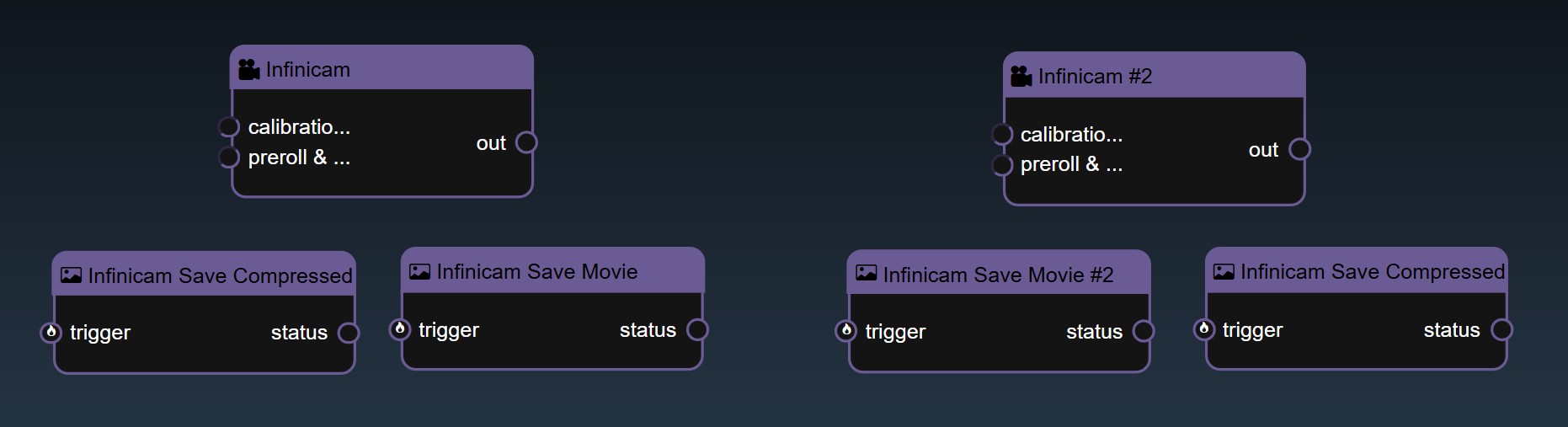

When an Infinicam is plugged in, a node called "Infinicam" will come up. This node allows you to view the Infinicam, and also set the preroll and postroll frames (pre/postroll frames will be discussed later). If multiple Infinicams are connected, each Infinicam will show up as its own node (i.e. "Infinicam", "Infinicam #2", etc). Note that the Infinicam may take a few seconds to open. Also note that this node only allows you to view the Infinicam; saving is done separately.

Infiniworkflow has 2 ways of saving Infinicam footage - "Infinicam Save Movie" and "Infinicam Save Compressed". These 2 saving nodes will come up for each respective Infinicam that is connected to your machine (in other words, if you have 2 Infinicams connected, "Infinicam Save Movie" and "Infinicam Save Compressed" save footage from the first Infinicam, and "Infinicam Save Movie #2" and "Infinicam Save Compressed #2" save footage from the second Infinicam). Note that these saving nodes do not need to be connected to the "Infinicam" viewer node itself; all that is required is that the Trigger is clicked.

The "Infinicam Save Movie" node, upon hitting the Trigger, saves footage from the selected Infinicam in any file type (.MP4, .MDAT, etc.) and to any file location. The total number of frames of Infinicam footafe that will be saved by this node when the Trigger is clicked is based on your Infinicam's pre-roll and post-roll number of frames. To explain what these terms means, consider the following example: you wish to save footage whenever an object falls off a conveyor belt in a factory. You have a workflow that will set a Trigger to True as soon as it detects that an object has just begun to fall off the belt. To understand why objects sometimes fall off the belt, you want to save the 2000 frames of footage from before the moment the object begins falling, as well as 1000 frames of footage after that point for good measure. Thus, you will set your pre-roll to 2000, and your post-roll to 1000. When Infinicam Save Movie node is Triggered, a total of 3000 frames will be saved precisely as you want them to.

The "Infinicam Save Movie" node tends to be slower, as it needs to compress and decompress data on the fly. The "Infinicam Save Compressed" node, on the other hand, saves out compressed images, which means that the footage gets saved to your computer faster and is more informationally dense (a single 2 second video can be a few hundred megabytes). Whereas the prior node allows users to select the Codec and the File format, the "Infinicam Save Compressed" node hardcodes both, so 2 files are always returned: a MDAT file of the footage itself and a CIH file of the footage metadata.

Note for both saving nodes: if the Trigger has already been pressed and you wish to stop saving (i.e. save a shorter clip of footage), you can simply click the Trigger again to immediately save out all frames already gathered to your machine.

If you wish to view the footage that is saved from the "Infinicam Save Compressed" node, use the "Infinicam Movie Reader" node, which reads MDAT/CIH files.

Important note: by default, when "Infinicam Save Compressed" is Triggered, the number of frames that will be saved will be equal to the Infinicam's preroll plus postroll. However, if you wish to save out Infinicam footage continually, you can click the checkbox in the "Infinicam Save Compressed" editing menu for "Constant Saving". When true, you may set the maximum file size you wish for the saved Infinicam footage. When the node is Triggered now, footage will continue to save into the file you created until the maximum file size limit has been reached.

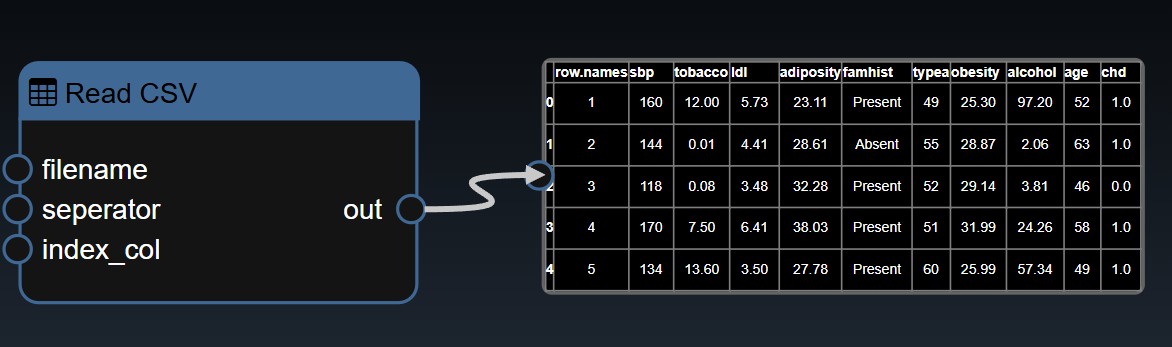

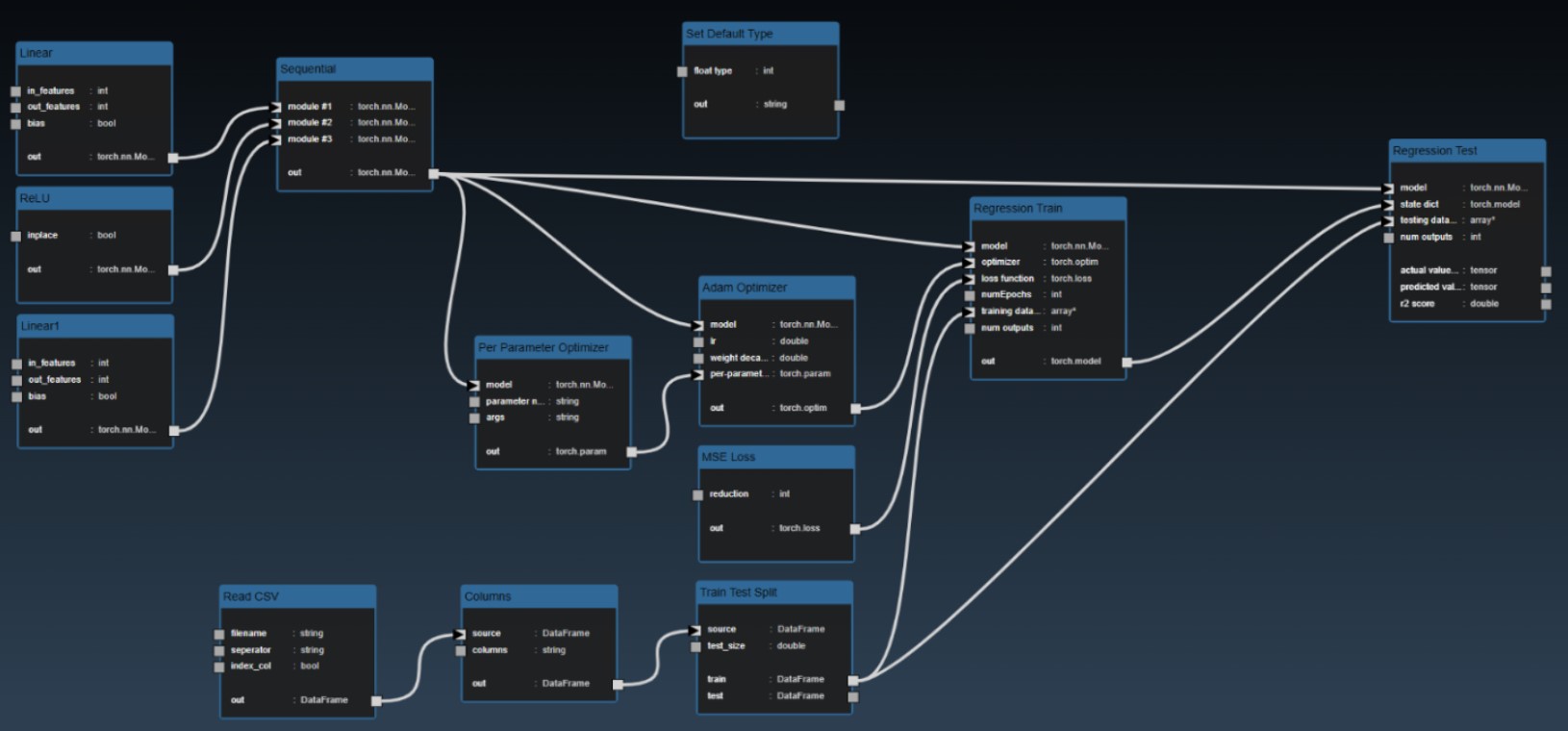

The Data Science tools are all under the Data Frame category. The implementation is based on Pandas, an open source data analysis and manipulation library. A DataFrame can be loaded with the "Read CSV" or "Read Excel" tools or created programmatically with the "Random Table" tool or converting from numpy or tensors. For many of the tools, they will use "Column" or "Columns" properties representing a choice of a single column or a subset of columns. Some of the tools also have an "arg" property which is a map parameter that allows you to pass in additional key/value pair optional arguments The Key/Value Dialog UI will show the corresponding Pandas function's documentation which is useful to determine the additional parameters you wish to set.

See the reference section for the full list of Data Science Tools

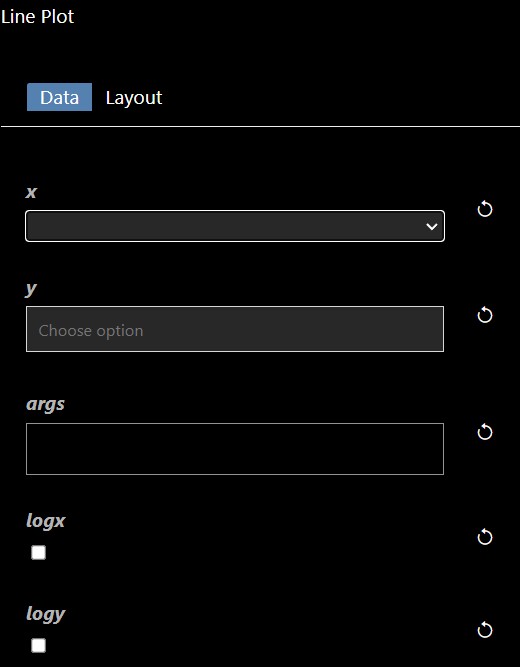

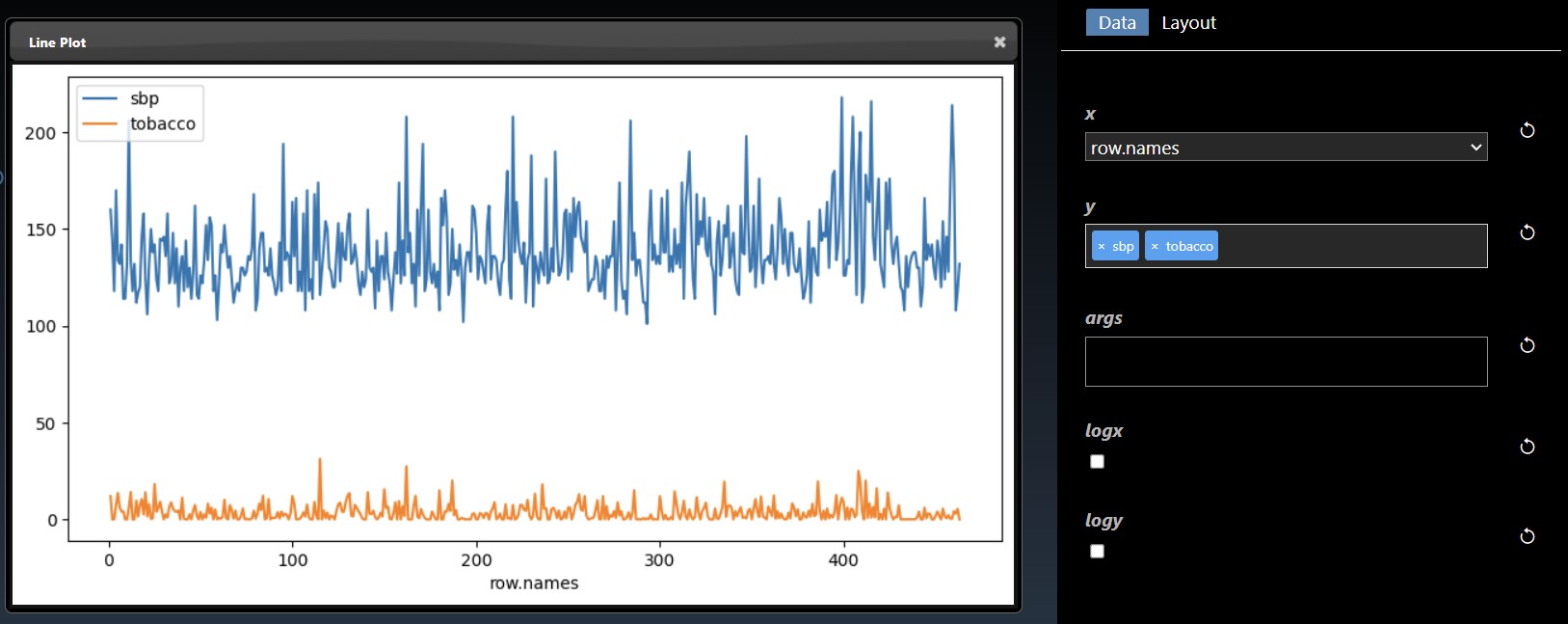

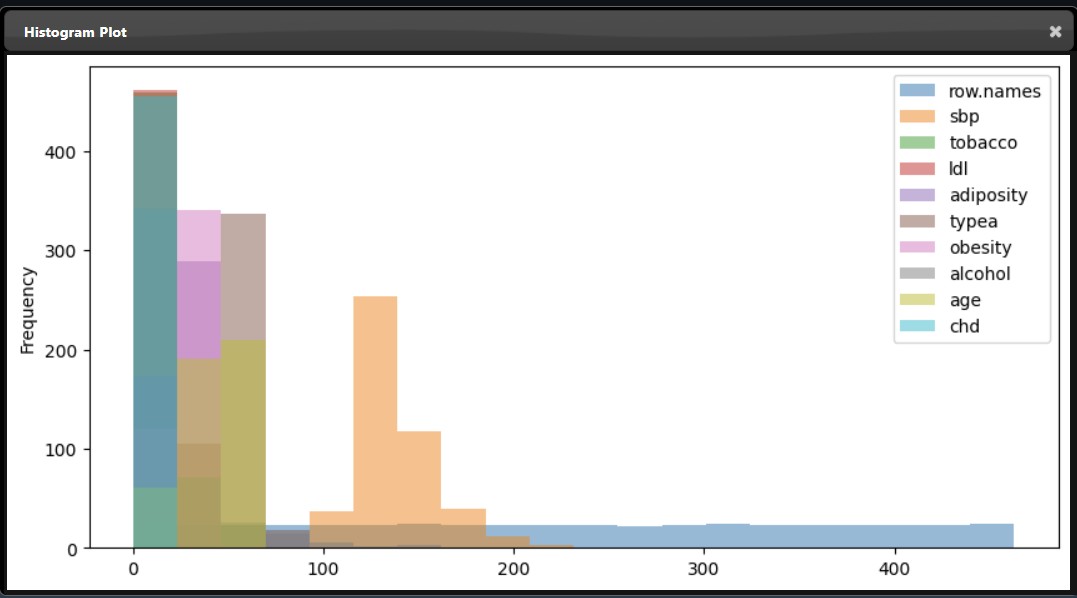

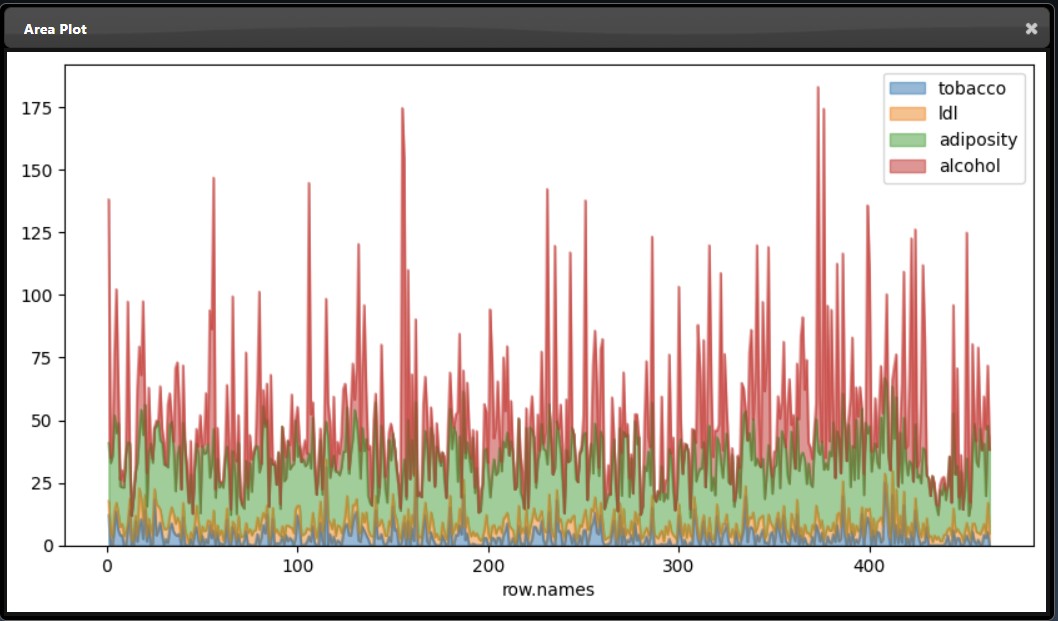

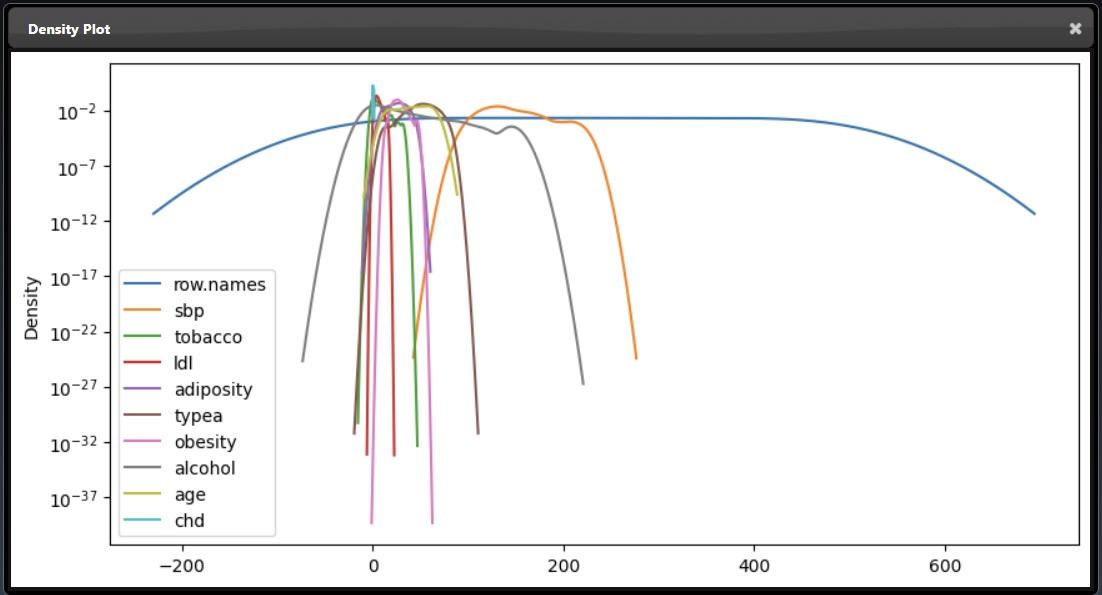

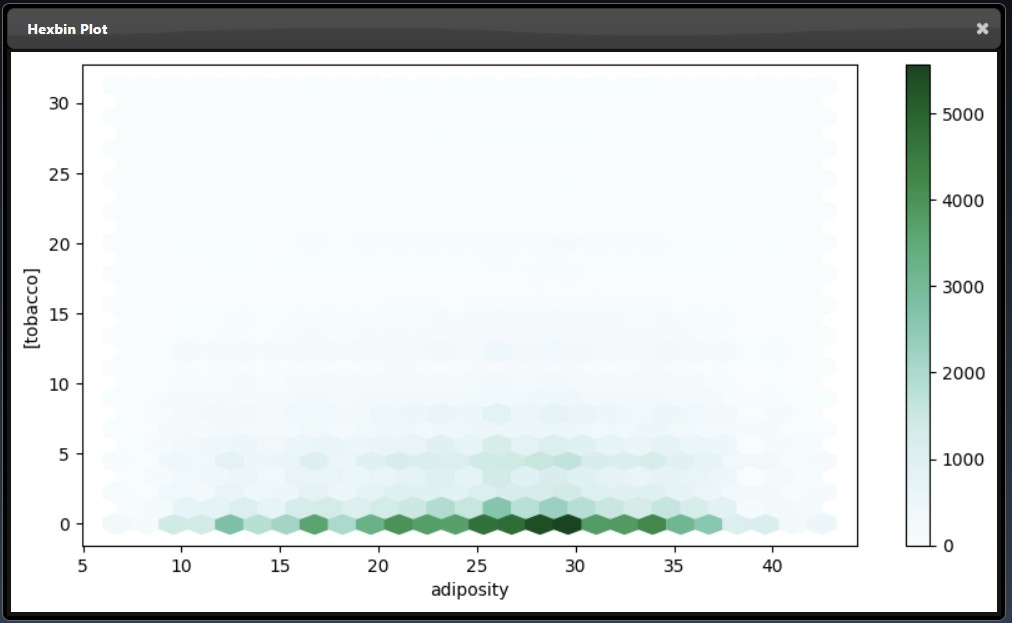

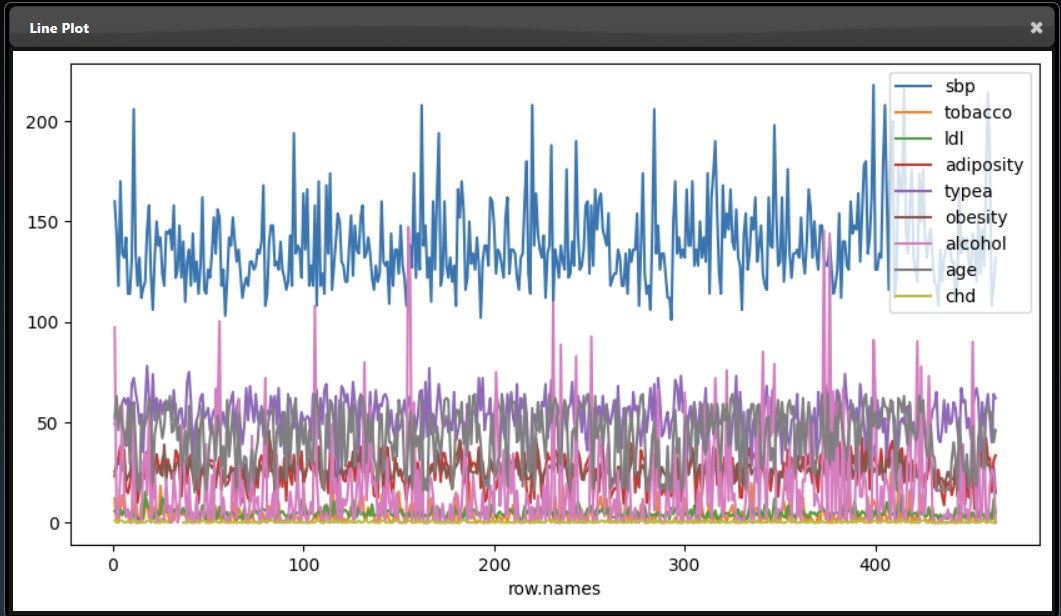

A number of tools are available to create charts for DataFrames. These tools are all under the Plot category. Each plot tool has parameters placed into two different tabs: Data and Layout. The Data parameters allow you to set the columns you wish to plot and the Layout parameters allow you to adjust the title of the chart etc. For example, the "Line Plot" tool has the following Data parameters:

The X and Y allow you to set the columns you wish for the X and Y-axis. If no columns are set for the Y-axis then the plot will include all numerical columns in the DataFrame. If the X parameter is not set then the index of the DataFrame will be used as the X-axis. In the example, below two columns (sbp and tobacco) are plotted for Y against the "row.names" column:

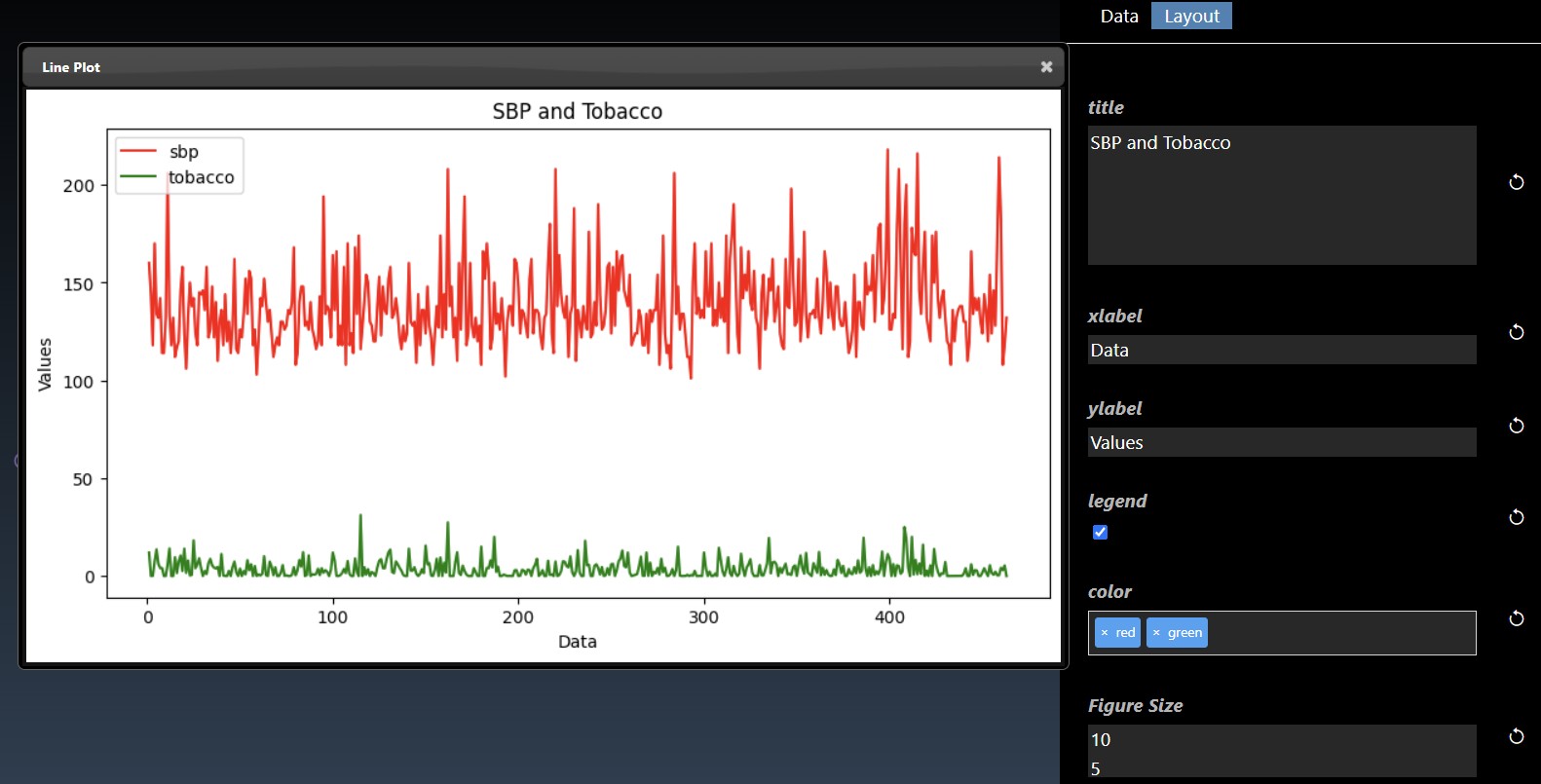

The Layout tab allows you to specify the title for the chart as well as the labels for the axis. You can also hide or show the Legend and set the size of the figure in inches. The "color" parameter is a multi-selection list parameter that you can set colors such as "red", or #6580ab etc. If you have two Y columns you plot then if you set one color both line charts will use the same color but if you set two colors in the list then you can distinguish each line chart.

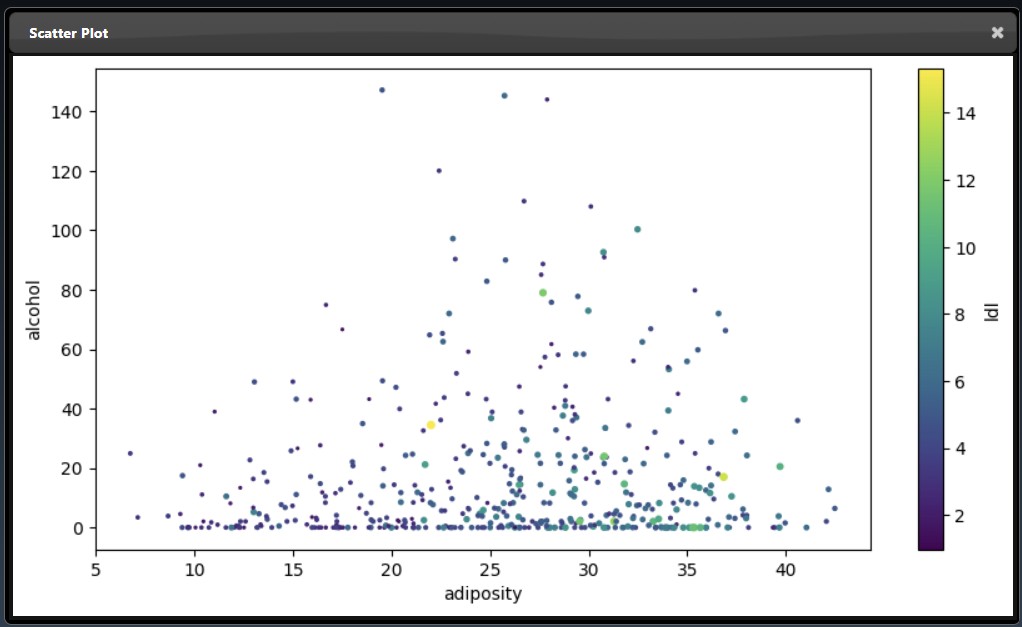

An subset of the plot tool visualizations are as follows:

|

|

|

|

|

|

See the reference section for the full list of Plot Tools

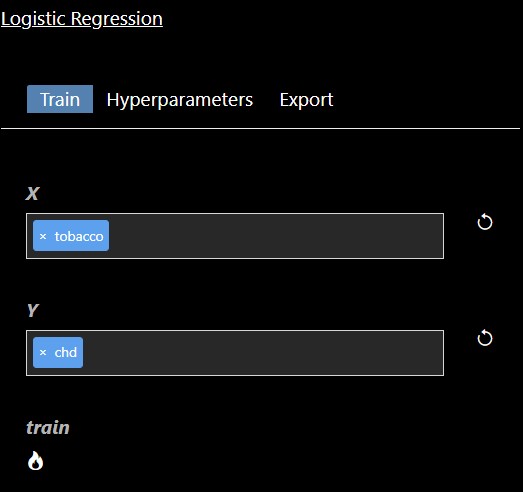

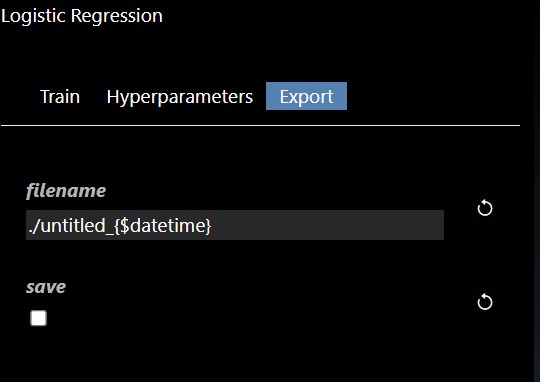

The Machine Learning filters are based using scikit-learn Python module are all under the ML category. Each ML tool has 3 tabs: Train, Hyperparameters and Export. The Train parameters allow you to set the X and Y columns as well as a trigger to start the training. As training can be slow so a trigger is used to start the process, however, when doing a grid search, the trigger is automatically generated. An example of the training parameters for the 'Logistic Regression' ML Tool is shown as follows:

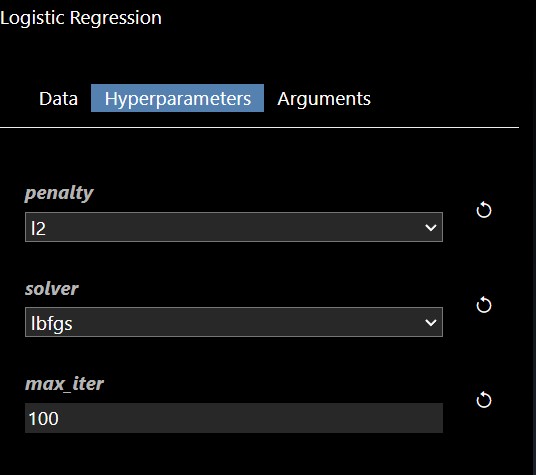

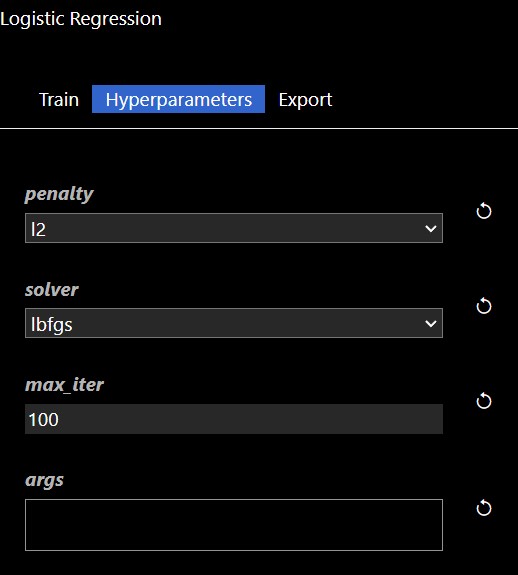

In this scenerio, we are training a model based on the tobacco column to predicy heart disease (chd). Clicking the "train" trigger will start the process of fitting the data to create a ML model. The Hyperparameters tab has the specific hyperparameters that allow customization and tuning of the model. The hyperparameters for the logistic regression tool are as follows:

Each ML training tool will have a different set of hyperparameters and these will show up in the Grid Search dialog. Additionally, an "arg" map parameter is also included which allows you to set any parameters that are not in the UI - this is a map of key/values pairs. After clicking the "arg" widget, the Key/Value dialog appears that shows the documentation of the ML model, this is useful so you can review any additional parameters you may wish to set:

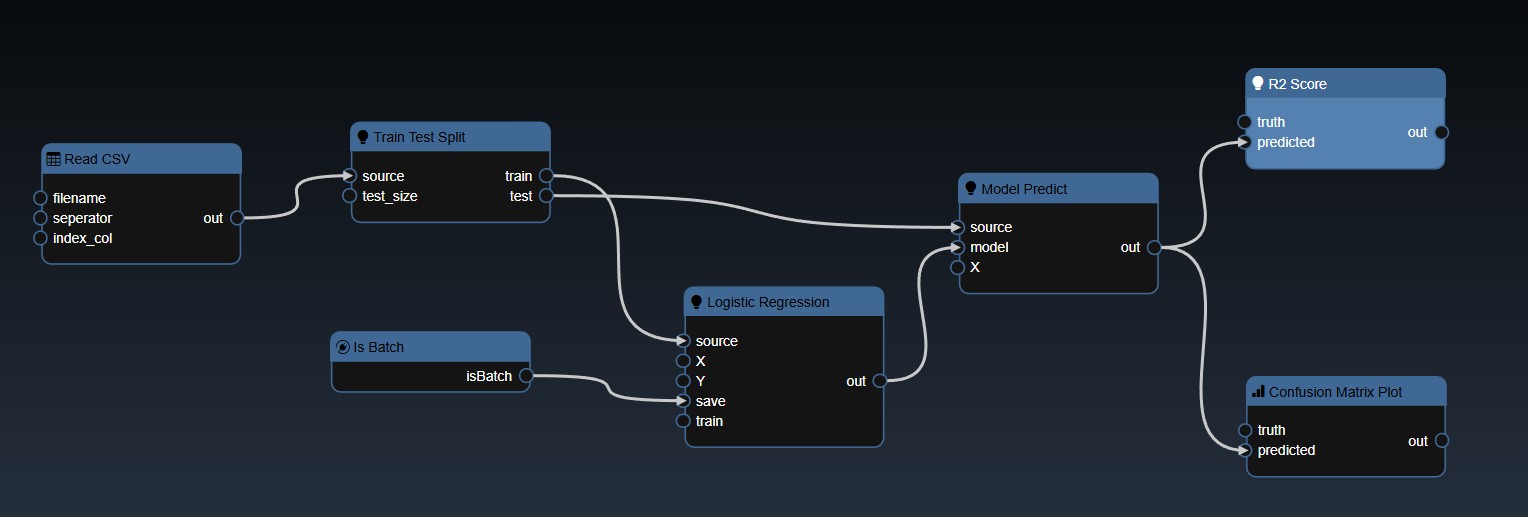

The Export tab allows you to set whether you want to save the model to a file, by default models will not be saved but it is recommended to save your models whenever you have complex models that take time to execute. A common practice when doing grid search is to connect the "Is Batch" Tool to the "save" input parameter of the model - this will always be true when a grid search is done in a background batch process - thus, the models will be saved during the grid search process.

The typical approach to building models involves splitting your training data into test and train splits. The following workflow illustrates the steps involved and the nodes required to implement the training:

The CSV file is read and then a test train split is done, the training table is then passed to the ML model. In this case the "Is Batch" Tool is used to set the "save" parameter which will automatically save the model for any Grid Search. The output of the model is then passed to a model predict and the predicted values can be compared against the ground truth to establish the accuracy of the model. In this scenerio, we use a confusion matrix to plot the accuracy of the results. And a ML metric nodes such as "R2 Score" allow you to see the accuracy and it can be further used to initiate a Grid Search.

See the reference section for the full list of ML Tools

The AI Inference algorithms including using pretrained models tools are all under the AI category. The full list of display tools is as follows:

| Name | Icon | Inputs | Outputs | Description |

|---|---|---|---|---|

| Body Pose COCO |

|

| Body Pose COCO detection using CAFFE model The following package is required: pose |

|

| Body Pose MPI |

|

| Body Pose MPI detection using CAFFE model The following package is required: pose |

|

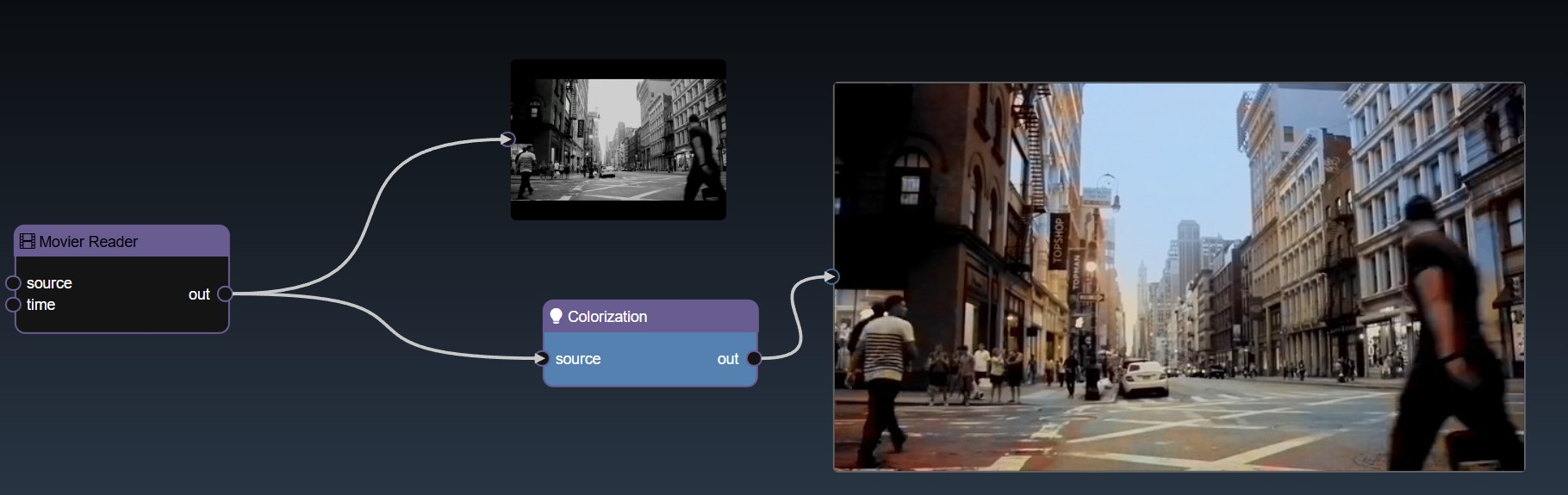

| Colorization |

|

| Colorization The following package is required: colorization |

|

| Depth Inference |

|

| Detects depth using ONNX model The following package is required: midas |

|

| Holistically-Nested Edges |

|

| Detects edges using CAFFE model The following package is required: edge |

|

| Dexined Edge Detect |

|

| Detects edges using ONNX model The following package is required: dexined |

|

| YuNET Face Detect |

|

| Detect faces using YuNET ONNX model. This model can detect faces of pixels between around 10x10 to 300x300 due to the training scheme. The following package is required: yunet |

|

| Face Tracker |

|

| Detects and tracks facial and body features The following package is required: haarcascades |

|

| YuNET Facial Expression |

|

| Detect facial expressions using YuNET ONNX model: angry, disgust, fearful, happy, neutral, sad, surprised. The following package is required: yunet |

|

| Handpose Estimation |

|

| Detects palms and fingers based on OpenPose neural network model. In out, the 1st column is the id of the point, the 2nd and 3rd are the coordinates of that point, and the 4th column is the confidence. The following package is required: pose |

|

| Human Parsing Inference |

|

| Parses (segments) human body parts from an image using opencv's dnn The following package is required: human |

|

| Human Segmentation Inference |

|

| Perform segmentation on humans using PPHumanSeg model. The following package is required: human_segmentation |

|

| Mask Inference |

|

| Mask labels objects based on RCNN neural network model The following package is required: mask_rcnn |

|

| ONNX for Basic Classification |

|

| Performs basic Classification using custom ONNX model The following package is required: onnx_runtime_windows |

|

| ONNX for Basic Segmentation |

|

| Performs basic Segmentation using custom ONNX model The following package is required: onnx_runtime_windows |

|

| ONNX for Regression |

|

| Performs Regression using custom ONNX model The following package is required: onnx_runtime_windows |

|

| Onnx Runtime Classification |

|

| Onnx Runtime Inference for Classifications The following package is required: onnx_runtime_windows |

|

| Onnx Runtime YOLOX |

|

| Onnx Runtime Inference for YOLOX The following package is required: onnx_runtime_windows |

|

| Person ReID |

|

| Matches a person's identity across different cameras or locations in a video or image sequence using features such as appearance, body shape, and clothing to match their identity in different frames The following package is required: personReiD |

|

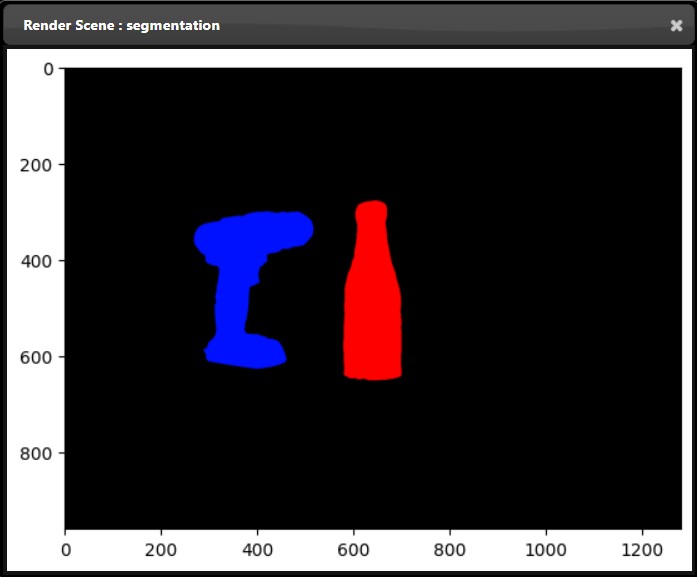

| Segmentation |

|

| Parses (segments) various objects from an image using opencv's dnn The following package is required: segmentation |

|

| Speech Recognition |

|

| Detects speech | |

| Text Spotting |

|

| Spots text in images using DNN The following package is required: text_spotting |

|

| YOLO3 Classification |

|

| Detects and labels objects based on YOLO neural network model The following package is required: custom_yolo3 |

|

| YOLO5 Classification |

|

| Detects and labels objects based on YOLO5 neural network model The following package is required: yolo |

|

| YOLOX Inference |

|

| YOLOX is a high-performing object detector The following package is required: yolox_inference |

The Audio filters tools are all under the Audio category. The full list of display tools is as follows:

| Name | Icon | Inputs | Outputs | Description |

|---|---|---|---|---|

| Amplify Audio |

|

| Makes audio louder or quieter The following package is required: audio |

|

| Bandpass Audio |

|

| Filters out low and high frequencies The following package is required: audio |

|

| Classify Audio |

|

| Outputs the sounds detected and their confidence scores The following package is required: audio_classify |

|

| Concat Audio |

|

| Combines audio files and saves it The following package is required: audio |

|

| Fade Audio |

|

| Fades into and out of audio The following package is required: audio |

|

| Frequency Audio |

|

| Returns min, max, average, and harmonic volume of audio The following package is required: audio |

|

| Highpass Audio |

|

| Filters out low frequencies The following package is required: audio |

|

| Input Audio |

| Streams audio from microphone The following package is required: audio |

||

| Length Audio |

|

| Returns length of audio The following package is required: audio |

|

| Lowpass Audio |

|

| Filters out high frequencies The following package is required: audio |

|

| Output Audio |

|

| Plays audio stream The following package is required: audio |

|

| Play Audio |

|

| Plays Audio from an audio object The following package is required: audio |

|

| PYIN Audio |

|

| Uses the probabilistic YIN algorithm to return fundamental frequency of audio The following package is required: audio |

|

| Read Audio |

|

| Reads Audio from a file The following package is required: audio |

|

| Reverse Audio |

|

| Reverses audio The following package is required: audio |

|

| Save Audio |

|

| Saves Audio to a file The following package is required: audio |

|

| Slice Audio |

|

| Trims audio file and saves it The following package is required: audio |

|

| Variable Speed Audio |

|

| Plays audio faster or slower The following package is required: audio |

|

| Volume Audio |

|

| Returns min, max, and average volume of audio The following package is required: audio |

The Color Correction tools are all under the Color category. The full list of display tools is as follows:

| Name | Icon | Inputs | Outputs | Description |

|---|---|---|---|---|

| Brightness |

|

| Change the Brightness thorugh a Look Up Table (L.U.T.) for a Colored Image | |

| CLAHE Histogram Equalization |

|

| CLAHE, or Contrast Limited Adaptive Histogram Equalization, is an image processing technique used to enhance the local contrast of images, best used when the overall image contrast is low or uneven. | |

| Contrast |

|

| Change the Contrast thorugh a Look Up Table (L.U.T.) for a Colored Image | |

| Color Curve |

|

| Create a Curve Mask Thorugh a Look Up Table (L.U.T.) for a Colored Image | |

| Convert Colorspace |

|

| Convert Colorspace | |

| BGR->YUV |

|

| BGR to YUV of Cuda Buffer | |

| Brightness |

|

| Change Brightness of Cuda Buffer | |

| Contrast |

|

| Change Contrast of Cuda Buffer | |

| Crop |

|

| Crop input image | |

| Gamma |

|

| Change Gamma of Cuda Buffer | |

| Gamma Fwd |

|

| Gamma Fwd of Cuda Buffer | |

| Gamma Inv |

|

| Gamma Inv Cuda Buffer | |

| HLS->RGB |

|

| HLS to RGB of Cuda Buffer | |

| HSL Correct |

|

| Modify Color of Cuda Buffer Using HSL Sliders | |

| HSV->RGB |

|

| HSV to RGB of Cuda Buffer | |

| HSV Correct |

|

| Modify Color of Cuda Buffer Using HSV/HSB Sliders | |

| Invert |

|

| Inverts RGB channels of Cuda Buffer | |

| Levels |

|

| Smoothstep leveling of Cuda Buffer Using Gamma Function | |

| Lift |

|

| Change Lift of Cuda Buffer | |

| RGB->HLS |

|

| RGB to HLS of Cuda Buffer | |

| RGB->HSV |

|

| RGB to HSV of Cuda Buffer | |

| RGB->YUV |

|

| RGB to YUV of Cuda Buffer | |

| Smoothstep |

|

| Smoothstep of Cuda Buffer | |

| YUV->BGR |

|

| YUV to BGR of Cuda Buffer | |

| YUV->RGB |

|

| YUV to RGB of Cuda Buffer | |

| Debayer |

|

| Debayer | |

| Histogram Equalization |

|

| Histogram Equalization | |

| Gamma |

|

| Change the Gamma thorugh a Look Up Table (L.U.T.) for a Colored Image | |

| HSL->HSV |

|

| Convert Colorspace | |

| HSL->RGB |

|

| Convert Colorspace | |

| HSV->RGB |

|

| Convert Colorspace | |

| Image2D to Matrix2D |

|

| Convert Image2D to Matrix2D | |

| Invert Color |

|

| Invert Color Using Bitwise Not | |

| Levels |

|

| In/Out Black and White and Gamma Levels | |

| Color Lift |

|

| Lifts the Brightness thorugh a Look Up Table (L.U.T.) for a Colored Image | |

| Matrix2D to Image2D |

|

| Convert Matrix2D to Image2D | |

| RGB->HSL |

|

| Convert Colorspace | |

| RGB->HSV |

|

| Convert Colorspace | |

| RGB->YUV |

|

| Convert Colorspace | |

| Smoothstep |

|

| Smoothstep to set in and out black levels | |

| YUV->HSV |

|

| Convert Colorspace | |

| YUV->RGB |

|

| Convert Colorspace |

The Combine and Split Images tools are all under the Composite category. The full list of display tools is as follows:

| Name | Icon | Inputs | Outputs | Description |

|---|---|---|---|---|

| Absolute Difference |

|

| Absolute Difference Operations on Two Images | |

| Add |

|

| Add Operations on Two Images | |

| Bitwise And |

|

| Bitwise And Operations on Two Images | |

| Binary |

|

| Binary Operations on Two Images | |

| Add |

|

| Composite with Add blend mode | |

| Average |

|

| Composite with Average blend mode | |

| Blend |

|

| Change Blend of Cuda Buffer | |

| Color Burn |

|

| Composite with Color Burn blend mode | |

| Color Dodge |

|

| Composite with Color Dodge blend mode | |

| Darken |

|

| Composite with Darken blend mode | |

| Difference |

|

| Composite with Difference blend mode | |

| Exclusion |

|

| Composite with Exclusion blend mode | |

| Glow |

|

| Composite with Glow blend mode | |

| Hard Light |

|

| Composite with Hard Light blend mode | |

| Hard Mix |

|

| Composite with Hard Mix blend mode | |

| Lighten |

|

| Composite with Lighten blend mode | |

| Linear Burn |

|

| Composite with Linear Burn blend mode | |

| Linear Dodge |

|

| Composite with Linear Dodge blend mode | |

| Linear Light |

|

| Composite with Linear Light blend mode | |

| Multiply |

|

| Composite with Multiply blend mode | |

| Negation |

|

| Composite with Negation blend mode | |

| Normal |

|

| Composite with Normal blend mode | |

| Overlay |

|

| Composite with Overlay blend mode | |

| Phoenix |

|

| Composite with Phoenix blend mode | |

| Pin Light |

|

| Composite with Pin Light blend mode | |

| Reflect |

|

| Composite with Reflect blend mode | |

| Screen |

|

| Composite with Screen blend mode | |

| Soft Light |

|

| Composite with Soft Light blend mode | |

| Subtract |

|

| Composite with Subtract blend mode | |

| Vivid Light |

|

| Composite with Vivid Light blend mode | |

| Divide |

|

| Divide Operations on Two Images | |

| Draw Circles |

|

| Draws Circles | |

| Draw Contours |

|

| Draws contours outlines or filled contours | |

| Draw Lines |

|

| Draws Lines | |

| Draw Paths |

|

| Draws Paths | |

| Draw Rectangles |

|

| Draws Rectangles | |

| Draw Shapes |

|

| Draws Lines, Circles, and/or Rectangles | |

| Draw Text |

|

| Draws Text String | |

| Extract Channel |

|

| Extract One Channel | |

| Horizontal Combine |

|

| Horizontally Combine Two Images | |

| Maximum |

|

| Maximum Operations on Two Images | |

| Merge |

|

| Merges inputs into one channel. | |

| Minimum |

|

| Minimum Operations on Two Images | |

| Multiply |

|

| Multiply Operations on Two Images | |

| Bitwise Not |

|

| Inverts every bit of an array | |

| Bitwise Or |

|

| Bitwise Or And Operations on Two Images | |

| Split |

|

| Splits image into individual channels. | |

| Per Element Sqrt |

|

| Calculates a square root of array elements | |

| Subtract |

|

| Subtract Operations on Two Images | |

| Switch Image2D |

|

| Outputs one of the selected inputs | |

| Vertical Combine |

|

| Vertically Combine Two Images | |

| Bitwise XOR |

|

| Bitwise XOR Operations on Two Images |

The Database filters tools are all under the Database category. The full list of display tools is as follows:

| Name | Icon | Inputs | Outputs | Description |

|---|---|---|---|---|

| Store Row to Database |

|

| Store Row to Database The following package is required: database |

|

| Connect Generic Database |

|

| Connect to a database The following package is required: database |

|

| Connect MySQL |

|

| Connect to a MySQL database The following package is required: database |

|

| Connect Oracle |

|

| Connect to a Oracle database The following package is required: database |

|

| Connect PostgreSQL |

|

| Connect to a PostgreSQL database The following package is required: database |

|

| Connect SQLite |

|

| Connect to a SQLite database The following package is required: database |

|

| Connect Teradata |

|

| Connect to a Teradara database The following package is required: database |

|

| Get Table Names |

|

| Get names of all tables in Database The following package is required: database |

|

| Query Database |

|

| Query Database table The following package is required: database |

|

| Read Database Chunk |

|

| Read tables from Database in chunks The following package is required: database |

|

| Read Database Table |

|

| Read Database table The following package is required: database |

|

| Store Table to Database |

|

| Store Table to Database The following package is required: database |

The Datascience filters tools are all under the Datascience category. The full list of display tools is as follows:

| Name | Icon | Inputs | Outputs | Description |

|---|---|---|---|---|

| Bool Cell |

|

| Get bool cell value. | |

| Columns |

|

| Returns a subset of columns | |

| Columns Table |

|

| Returns the columns of the table | |

| Count Table |

|

| Returns the count of the table | |

| Group By Count Table |

|

| Returns the table with grouped count | |

| Double Cell |

|

| Get double cell value. | |

| Drop Columns Table |

|

| Returns the table with some columns dropped | |

| Drop Nan Columns |

|

| Drop any columns with Not a Number | |

| Drop Nan Rows |

|

| Drop any rows with Not a Number | |

| Drop Rows Table |

|

| Returns the table with some rows dropped | |

| Fill Nan Columns |

|

| Fill any columns with Not a Number | |

| Fill Nan Rows |

|

| Fills any rows with Not a Number | |

| Index Location Table |

|

| Integer-location based indexing for selection by position. | |

| Int Cell |

|

| Get int cell value. | |

| Join Table |

|

| Join Two Tables | |

| Export Matrix2D |

| Exports CSV file from Matrix2D | ||

| Max Table |

|

| Returns the max of the table | |

| Group By Max Table |

|

| Returns the table with grouped max | |

| Mean Table |

|

| Returns the mean of the table | |

| Group By Mean Table |

|

| Returns the table with grouped mean | |

| Merge Table |

|

| Merges Two Tables | |

| Min Table |

|

| Returns the min of the table | |

| Group By Min Table |

|

| Returns the table with grouped min | |

| Numpy To Table |

|

| Converts numpy to dataframe | |

| One Hot Encoding |

|

| Reflect the DataFrame over its main diagonal by writing rows as columns and vice-versa | |

| Random Table |

|

| Return random table | |

| Read CSV |

|

| Read CSV file into Panda Table | |

| Read Excel |

|

| Read Excel into Panda Table | |

| Sample Table |

|

| Returns the sampled table | |

| Set Table |

|

| Set values in table | |

| Table Shape |

|

| Returns the number of rows and columns | |

| Sort Columns |

|

| Sort Columns | |

| Sort Rows |

|

| Sort Rows | |

| STD Table |

|

| Returns the standard deviation of the table | |

| Group By STD Table |

|

| Returns the table with grouped standard deviations | |

| String Cell |

|

| Get string cell value. | |

| Sum Table |

|

| Returns the sum of the table | |

| Group By Sum Table |

|

| Returns the table with grouped sums | |

| Export CSV |

| Exports CSV file from Panda Table | ||

| Transpose Table |

|

| Reflect the DataFrame over its main diagonal by writing rows as columns and vice-versa | |

| Value Counts Table |

|

| Returns the number of unique rows of the table | |

| Where Table |

|

| Returns the table after a query is performed |

The Experimental Tools tools are all under the Experimental category. The full list of display tools is as follows:

| Name | Icon | Inputs | Outputs | Description |

|---|---|---|---|---|

| Abs Subtraction Shaders |

|

| Find Absolute Value of Difference Between 2 Images using a GPU Shader | |

| Add Shaders |

|

| Add 2 Images Together using a GPU Shader | |

| Beams Shader |

|

| Applies Beam Rendering | |

| Brightness Shader |

|

| Change the Brightness using a GPU Shader | |

| Clouds Shader |

|

| Applies Cloud Rendering | |

| Contrast Shader |

|

| Change the Contrast using a GPU Shader | |

| Dissolve Shaders |

|

| Dissolve 2 Images Together using a GPU Shader | |

| Texture Download |

|

| Downloads to CPU System Memory from GPU Texture Memory | |

| Flip Shader |

|

| Flips horizontal/vertical | |

| Gamma Shader |

|

| Change the Gamma using a GPU Shader | |

| Geo Api |

|

| Geo Api The following package is required: geopy |

|

| Grayscale Shader |

|

| Change a Color Texture to Grayscale using a GPU Shader | |

| Horizontal Ramp |

|

| Change Color Texture with Vertical Ramp using a GPU Shader | |

| Invert Shader |

|

| Inverts RGB channels of OpenGL Texture | |

| Lift Shader |

|

| Change the Lift using a GPU Shader | |

| Max Shaders |

|

| Find Max of 2 Images Together using a GPU Shader | |

| Min Shaders |

|

| Find Min of 2 Images Together using a GPU Shader | |

| Multiply Shaders |

|

| Multiply 2 Images Together using a GPU Shader | |

| Primatte AI |

|

| Primatte AI | |

| Reverse Geo Api |

|

| Reverse Geo Api The following package is required: geopy |

|

| Sobel Shader |

|

| Applies Soberl Edge Filter | |

| Stock Price |

|

| Stock Price using Yahoo Finance The following package is required: yfinance |

|

| Subtract Shaders |

|

| Subtract 2 Images Together using a GPU Shader | |

| Texture Output |

| Outputs Native Viewer | ||

| Transform Shader |

|

| Transform 2D Shader using a GPU Shader | |

| Texture Upload |

|

| Uploads CPU System Memory to GPU Texture Memory | |

| Vertical Ramp |

|

| Change Color Texture with Vertical Ramp using a GPU Shader |

The Image Processing Filters tools are all under the Image Processing category. The full list of display tools is as follows:

| Name | Icon | Inputs | Outputs | Description |

|---|---|---|---|---|

| Bilateral Filter |

|

| Applies bilateral filter to image | |

| Blur |

|

| Blurs an image using the normalized box filter | |

| Box Filter |

|

| Blurs an image using the box filter | |

| Build Pyramid |

|

| Constructs the Gaussian pyramid for an image. 4 images outputted. | |

| Cam Shift |

|

| Finds the rotated rectangle with the maximum number of points. When the object moves, the movement is reflected in the meanshift algorithm | |

| Canny Edge Detector |

|

| Canny Edge Detection is a popular edge detection algorithm | |

| Convert Depth |

|

| Convert Depth Precision between 8u, 8s, 16u, 16s, and 32f | |

| Bandpass filter |

|

| Band Pass filter to blur and sharpen | |

| Detect Circles |

|

| Detects circles in a grayscale image using the Hough transform. | |

| Detect Lines |

|

| Detects lines in a grayscale image using the Hough transform. | |

| Dilate |

|

| Blur and dilate image with vertical and horizontal blur | |

| Dilate 3x3 |

|

| Blur image based on maximum luminance value of surrounding pixels | |

| Erode |

|

| Blur and erode image with vertical and horizontal blur | |

| Erode 3x3 |

|

| Blur image based on minimum luminance value of surrounding pixels. 3x3 pixels are blurred at a time. | |

| Gauss |

|

| Gauss Filter on Cuda Buffer | |

| High Pass |

|

| High Pass Filter on Cuda Buffer | |

| Iterative Blur |

|

| Blur image using iterative 3x3 blurs | |

| Laplace |

|

| Laplace Filter on Cuda Buffer | |

| Low Pass |

|

| Low Pass Filter on Cuda Buffer | |

| Median Blur |

|

| Blur image using median 3x3 blurs | |

| Morph Gradient Border |

|

| Morphological dilated pixel result minus morphological eroded pixel result with border control. | |

| Prewitt |

|

| Combination of Prewitt Horiz and Prewitt Vert on Cuda Buffer | |

| Roberts |

|

| Combination of Roberts Filter Down and Roberts Filter Up on Cuda Buffer | |

| Separable Blur |

|

| Blur image with vertical and horizontal blur | |

| Sharpen |

|

| Filters the Cuda Buffer using a sharpening filter kernel | |

| Sobel |

|

| Combination of Sobel Horiz and Sobel Vert on Cuda Buffer | |

| Delay |

|

| Shows a Delayed Image | |

| Dilate |

|

| Dilates an image (expands the primary object) by using a specific structuring element that determines the shape of a pixel neighborhood over which the maximum is taken | |

| Erode |

|

| Erodes an image (shrinks the primary object) by using a specific structuring element that determines the shape of a pixel neighborhood over which the minimum is taken | |

| Filter 2D |

|

| Convolves an image with the kernel, applying an arbitrary linear filter to an image | |

| Find Contours |

|

| Finds contours in a binary image | |

| Frequency Bandpass |

|

| Applies a bandpass filter to a 1D or 2D floating-point array | |

| Detect Circles |

|

| Detects circles in a grayscale image using the Hough transform. | |

| Detect Lines |

|

| Detects lines in a grayscale image using the Hough transform. | |

| Laplacian Edge Detector |

|

| Laplacian Edge Detect | |

| Mean Shift |

|

| Finds the rectangle with the maximum number of points. When the object moves, the movement is reflected in the meanshift algorithm | |

| Mean Color |

|

| Calculates an average (mean) value of array elements, independently for each channel | |

| Mean Mask |

|

| Calculates an average (mean) value of array elements for a grayscale image | |

| Median Blur |

|

| Blurs an image using the median filter | |

| Morphological Skeleton |

|

| Create compact representation of image using skeleton. | |

| Morphological Ex |

|

| Performs advanced morphological transformations using an erosion and dilation as basic operations. | |

| Morph Hit or Miss |

|

| Applies kernel onto binary input image to produce 1 channel output image of all pixels that match the kernel's pattern. | |

| Pyr Down |

|

| Blurs an image and downsamples it | |

| Pyr Up |

|

| Upsamples an image and then blurs it | |

| Radon Transform |

|

| Canny Edge Detection is calculates the projection of an image's intensity along lines at specific angles. | |

| Scharr Edge Detector |

|

| Scharr Edge Detect | |

| Sep Filter 2D Gabor |

|

| Applies a separable linear filter to an image | |

| Sep Filter 2D Gaussian |

|

| Applies a separable linear filter to an image | |

| Sobel |

|

| Detects edges by calculating the first, second, third, or mixed image derivatives using an extended Sobel operator | |

| Spatial Gradient |

|

| Calculates the first order image derivative in both x and y using a Sobel operator, which emphasizes regions of high spatial frequency that correspond to edges. | |

| Sqr Box Filter |

|

| Blurs an image using the box filter by calculating the normalized sum of squares of the pixel values overlapping the filter | |

| Stack Blur |

|

| Blurs an image by creating a kind of moving stack of colors whilst scanning through the image | |

| Get Structuring Element |

|

| Returns a structuring element of the specified size and shape for morphological operations. | |

| Sum Color |

|

| Calculates and returns the sum of array elements, independently for each channel | |

| Sum Mask |

|

| Calculates and returns the sum of array elements for a grayscale image | |

| Create Super Pixel |

|

| Initializes a SuperpixelLSC (Linear Spectral Clustering) object for the input image. |

The Grayscale Filters tools are all under the Grayscale category. The full list of display tools is as follows:

| Name | Icon | Inputs | Outputs | Description |

|---|---|---|---|---|

| Adaptive Threshold |

|

| The function is typically used to get a bi-level (binary) image out of a grayscale image | |

| Adaptive Binary Threshold |

|

| The function is typically used to get a bi-level (binary) image out of a grayscale image | |

| Adaptive Binary Inverse Threshold |

|

| The function is typically used to get a bi-level (binary inverse) image out of a grayscale image | |

| Chroma Keyer |

|

| Chroma Key of Cuda Buffer | |

| CIELAB Threshold |

|

| CIELAB Threshold of Cuda Buffer | |

| Grayscale |

|

| Grayscale of Cuda Buffer | |

| Hue Threshold |

|

| Hue Threshold of Cuda Buffer | |

| RGB Threshold |

|

| RGB Threshold of Cuda Buffer | |

| Grayscale |

|

| Convert to grayscale | |

| Color In Range |

|

| Threshold if between min and max | |

| Mask Brightness |

|

| Change the Brightness thorugh a Look Up Table (L.U.T.) for a Mask | |

| Mask Circles |

|

| Draws Circles with Masks | |

| Mask Contrast |

|

| Change the Contrast thorugh a Look Up Table (L.U.T.) for a Mask | |

| Mask Curve |

|

| Create a Curve Mask Thorugh a Look Up Table (L.U.T.) | |

| Mask Gamma |

|

| Change the Gamma thorugh a Look Up Table (L.U.T.) for a Mask | |

| Invert Mask |

|

| Inverts the Mask using Bitwise Not | |

| Mask Lift |

|

| Lifts the Brightness thorugh a Look Up Table (L.U.T.) for a Mask | |

| Mask Paths |

|

| Draws Paths with Masks | |

| Mask Rectangles |

|

| Draws Rectangles with Masks | |

| Mask Shapes |

|

| Draws Lines, Circles, and/or Rectangles with Masks | |

| Threshold |

|

| The function is typically used to get a bi-level (binary) image out of a grayscale image | |

| Threshold Binary |

|

| The function is typically used to get a bi-level (binary) image out of a grayscale image | |

| Threshold Binary Inverse |

|

| The function is typically used to get a bi-level (binary inverse) image out of a grayscale image | |

| Mask In Range |

|

| Mask Threshold if between min and max | |

| Threshold Mask |

|

| The function is typically used to get a bi-level (mask) image out of a grayscale image | |

| Threshold Otsu |

|

| The function is typically used to get a bi-level (binary inverse) image out of a grayscale image | |

| Threshold To Zero |

|

| The function is typically used to get a bi-level (to zero) image out of a grayscale image | |

| Threshold To Zero Inverse |

|

| The function is typically used to get a bi-level (to zero inverse) image out of a grayscale image | |

| Threshold Triangle |

|

| The function is typically used to get a bi-level (triangle) image out of a grayscale image | |

| Threshold Trunc |

|

| The function is typically used to get a bi-level (binary inverse) image out of a grayscale image |

The Source Inputs tools are all under the Inputs category. The full list of display tools is as follows:

| Name | Icon | Inputs | Outputs | Description |

|---|---|---|---|---|

| Folder Reader |

|

| Reads filepaths from a folder | |

| Grayscale Image Reader |

|

| Reads Grayscale Images from a file | |

| Image Reader |

|

| Reads Images from a file | |

| JSON File Reader |

|

| Reads from a JSON file | |

| Movie Reader |

|

| Reads Images from a movie | |

| Solid Color Image |

|

| Output a RGB, RGBA, or Alpha-only image. | |

| Solid Color Image |

|

| Output a RGB, RGBA, or Alpha-only image. | |

| Take Picture |

|

| Takes a picture | |

| Text |

|

| Outputs text as an image | |

| Text File Reader |

|

| Reads from a text file | |

| Webcam |

|

| Reads Images from a webcamera | |

| YouTube Reader |

|

| Streams data from a YouTube video The following package is required: youtube_reader |

The Logic functions tools are all under the Logic category. The full list of display tools is as follows:

| Name | Icon | Inputs | Outputs | Description |

|---|---|---|---|---|

| And |

|

| Logical AND operator | |

| Conditional Color Operator |

|

| Outputs one of the selected inputs | |

| Conditional Double2 Operator |

|

| Outputs one of the selected inputs | |

| Conditional Double3 Operator |

|

| Outputs one of the selected inputs | |

| Conditional Image2D Operator |

|

| Outputs one of the selected inputs | |

| Conditional Int2 Operator |

|

| Outputs one of the selected inputs | |

| Conditional Int3 Operator |

|

| Outputs one of the selected inputs | |

| Conditional Matrix Operator |

|

| Outputs one of the selected inputs | |

| Conditional Numeric Operator |

|

| Outputs one of the selected inputs | |

| Conditional String Operator |

|

| Outputs one of the selected inputs | |

| False |

| Returns False | ||

| Numeric a == b |

|

| Return if inputs are equal | |

| Numeric a > b |

|

| Return if input a > input b | |

| Numeric a >= b |

|

| Return if input a >= input b | |

| Numeric a < b |

|

| Return if input a < input b | |

| Numeric a <= b |

|

| Return if input a <= input b | |

| Numeric Compare a != b |

|

| Return if inputs are not equal | |

| Or |

|

| Logical OR operator | |

| Range |

|

| Return if number is in range | |

| True |

| Returns True |

The Math functions tools are all under the Math category. The full list of display tools is as follows:

| Name | Icon | Inputs | Outputs | Description |

|---|---|---|---|---|

| Abs |

|

| Return absolute value | |

| Arccos |

|

| Return inverse cosine of input x, result is in degrees | |

| Arccosh |

|

| Return inverse hyperbolic cosine of input x | |

| Arcsin |

|

| Return inverse sine of input x, result is in degrees | |

| Arcsinh |

|

| Return inverse hyperbolic sine of input x | |

| Atan2 |

|

| Return inverse hyperbolic tangent of input x | |

| Arctanh |

|

| Return archtan(a) | |

| Ceil |

|

| Return ceil(x) | |

| Cos |

|

| Return cosine of input x (where x is in degrees) | |

| Cosh |

|

| Return hyperbolic cosine of input x | |

| Counter Double |

|

| Counts numbers | |

| Counter Int |

|

| Counts numbers | |

| Divide |

|

| Return a/b | |

| e |

| Returns eulers number | ||

| Exponential |

|

| Return e^x | |

| Floor |

|

| Return floor(x) | |

| Log |

|

| Return log(x,base) | |

| Minus |

|

| Return a-b | |

| Mod |

|

| Return mod(a,b) | |

| Multiply |

|

| Return a*b | |

| One |

| Return number one | ||

| PI |

| Return PI | ||

| Plus |

|

| Return a+b | |

| Power |

|

| Return a^b | |

| Random Number |

|

| Return random number | |

| Sin |

|

| Return sine of input x (where x is in degrees) | |

| Sinh |

|

| Return hyperbolic sine of input x | |

| Sqrt |

|

| Return square root of x | |

| Square |

|

| Return x^2 | |

| Tan |

|

| Return tangent of input x (where x is in degrees) | |

| Tanh |

|

| Return hyperbolic tangent of input x | |

| Zero |

| Return number zero |

The Matrix operations tools are all under the Matrix category. The full list of display tools is as follows:

| Name | Icon | Inputs | Outputs | Description |

|---|---|---|---|---|

| Abs |

|

| Calculate the absolute value element-wise | |

| Add |

|

| Add arguments element-wise | |

| All |

|

| Test whether all array elements evaluate to True | |

| All Close |

|

| Returns if all elements x1 and x2 are within 1e-5 of one another (not suited for very small-valued matrices) | |

| Any |

|

| Test whether any array element evaluates to True | |

| Arange |

|

| Return values spaced by step within a given interval [start, stop] | |

| Per Element Comparison |

|

| Performs the per-element comparison of two arrays or an array and scalar value. When the comparison result is true, the corresponding element of output array is set to 255 | |

| Matrix Concatenate |

|

| Concatentate matrices | |

| Cross Product |

|

| Returns the cross product of 3-element vectors | |

| Determinant |

|

| Compute the determinant of an array | |

| Divide |

|

| Divide arguments element-wise | |

| Dot Product |

|

| Dot product of two vectors | |

| Eigen |

|

| Calculates eigenvalues and eigenvectors of a matrix | |

| Equal |

|

| Return (x1 == x2) element-wise | |

| Identity |

|

| Return a 2-D array with ones on the diagonal and zeros elsewhere. In other words, an identity matrix of size n | |

| HStack |

|

| Stack arrays in sequence horizontally (column wise). All input arrays have the same shape except for the 2nd axis | |

| Index |

|

| Accesses an array at a given matrix index | |

| Integral |

|

| Calculates the integral of an image | |

| Inverse Matrix |

|

| Finds the inverse or pseudo-inverse of a matrix | |

| Linspace |

|

| Returns num evenly spaced samples, calculated over the interval [start, stop] | |

| Least Squares |

|

| Return the least-squares solution to a linear matrix equation. Computes the vector x that approximately solves ax = b | |

| Matrix Multiply |

|

| Calculates the matrix multiplication of two arrays | |

| Max |

|

| Return the maximum of an array | |

| Mean |

|

| Compute the arithmetic mean | |

| Min |

|

| Return the minimum of an array | |

| Per Element Multiply |

|

| Calculates the per-element scaled product of two arrays | |

| Norm |

|

| Calculates the absolute norm of an array | |

| Eigen |

|

| Compute the eigenvalues and right eigenvectors of a square array | |

| Inverse Matrix |

|

| Compute the inverse of a square matrix | |

| Matrix Multiply |

|

| Matrix dot product of two arrays | |

| Per Element Multiply |

|

| Multiply arguments element-wise | |

| Norm |

|

| Matrix or vector norm. Frobenius norm for matrices, L2 norm for vectors. | |

| Scalar Multiply |

|

| Multiply matrix with scalar value | |

| Trace |

|

| Return the sum along diagonals of the array | |

| Transpose |

|

| Returns an array with axes transposed | |

| Matrix Ones |

|

| Return an array of filled with ones given shape and type | |

| Outer Product |

|

| Compute the outer product of two vectors | |

| Power |

|

| Raise a square matrix to the power n | |

| Pseudo Inverse Matrix |

|

| Compute the (Moore-Penrose) pseudo-inverse of a matrix | |

| QR Factorization |

|

| Compute the qr factorization of a matrix. Factor the matrix a as qr, where q is orthonormal and r is upper-triangular. | |

| Matrix Random |

|

| Random matrices | |

| Matrix Random Normal Distribution |

|

| Random matrices with values chosen from the “standard normal” distribution | |

| Rank |

|

| Return matrix rank of array using SVD method | |

| Relative Norm |

|

| Calculates an absolute difference norm or a relative difference norm of two arrays | |

| Reshape |

|

| Gives a new shape to an array without changing its data | |

| Select |

|

| Sets the output matrix to the value from the first input matrix if corresponding value of mask matrix is 255, or value from the second input matrix (if value of mask matrix set to 0) | |

| Shape |

|

| Return the shape of an array | |

| Matrix Size |

|

| Gives the matrix number of elements | |

| Solve |

|

| Solve a linear matrix equation, or system of linear scalar equations. Computes the exact solution x of ax = b | |

| Split |

|

| Split an array into multiple sub-arrays based on indices. For example, indices 2 and 3 returns array[:2], array[2:3], and array[3:] | |

| Sqrt |

|

| Return the non-negative square-root of an array, element-wise | |

| Standard Deviation |

|

| Returns the standard deviation of the elements | |

| Subtract |

|

| Subtract arguments element-wise | |

| Sum |

|

| Sum of array elements | |

| SVD |

|

| Singular Value Decomposition | |

| Trace |

|

| Returns the trace of a matrix, the sum of its diagonal elements | |

| Transpose |

|

| Transposes a matrix | |

| VStack |

|

| Stack arrays in sequence vertically (row wise). All input arrays have the same shape except for the 1st axis | |

| Where Matrix Filter |

|

| Return elements chosen from x or y depending on condition. If condition is True, return element from x, otherwise return y | |

| Matrix Zeros |

|

| Return an array of filled with zeros given shape and type |

The Machine Learning filters based using scikit-learn tools are all under the ML category. The full list of display tools is as follows:

| Name | Icon | Inputs | Outputs | Description |

|---|---|---|---|---|

| Bernoulli NB Classifier |

|

| Bernoulli Naive Bayes Classifier model | |

| Categorical NB Classifier |

|

| Categorical Naive Bayes Classifier model | |

| Complement NB Classifier |

|

| Complement Naive Bayes Classifier model | |

| Gaussian NB Classifier |

|

| Gaussian Naive Bayes Classifier model | |

| Gaussian Process Classifier |

|

| Gaussian Process Classifier model | |

| Gaussian Process Regressor |

|

| Gaussian Process Regressor model | |

| KNeighbors Classifier |

|

| KNeighbors Classifier model | |

| KNeighbors Regressor |

|

| KNeighbors Regressor model | |

| Lasso Regressor |

|

| Lasso model | |

| Linear Regression |

|

| Linear regression model | |

| Linear SVC Model |

|

| Linear Support Vector Classifier model | |

| Logistic Regression |

|

| Logistic regression model | |

| MSE |

|

| Calculates Mean Square Error | |

| MLP Neural Network |

|

| Neural Network MLP Classifier model | |

| Load ML Model |

|

| Load a ML model from a designated file | |

| Model Predict |

|

| Predicts test data using model | |

| Save ML Model |

| Save a ML model to a designated file | ||

| Multinomial NB Classifier |

|

| The multinomial Naive Bayes classifier is suitable for classification with discrete features (e.g., word counts for text classification). | |

| Nearest Centroid |

|

| Nearest Centroid Classifier model | |

| Optical Character Recognition |

|

| Reads text in an image using EasyOCR The following package is required: ocr |

|

| R2 Score |

|

| Calculates R2 Score | |

| Random Forest Model |

|

| Random Forest Classifier model | |

| Ridge Classifier |

|

| Ridge Classifier model | |

| Ridge Regressor |

|

| Ridge Regressor model | |

| SGD Classifier |

|

| Stochastic Gradient Descent Classifier model | |

| SGD Regressor |

|

| Stochastic Gradient Descent Regressor model | |

| SVC Model |

|

| Support Vector Classifier model | |

| Tensorboard Visualization |

|

| Visualizes machine learning training processes using Tensorboard | |

| Train Test Split |

|

| Returns train test split. |

The Display tools that allow you to visualize directly in the flowgraph tools are all under the Outputs category. The full list of display tools is as follows:

| Name | Icon | Inputs | Outputs | Description |

|---|---|---|---|---|

| Bool Display |

| Show Bool | ||

| Color Display |

| Show Color | ||

| DataFrame Display |

| DataFrame Viewer | ||

| Double2 Display |

| Show Double2 | ||

| Double3 Display |

| Show Double3 | ||

| Double Display |

| Show Double Number | ||

| Full Image Display |

| Show Full image | ||

| Fullscreen |

| Show Image | ||

| Full Image Display |

| Show Full Image | ||

| imshow Display |

| imshow Image Viewer - only displays on the server | ||

| Int2 Display |

| Show Int2 | ||

| Int3 Display |

| Show Int3 | ||

| Int Display |

| Show Int | ||

| Matrix2D Display |

| Show Matrix2D | ||

| String Display |

| Show String | ||

| Tensor Display |

| Tensor Viewer | ||

| Thumbnail Image Display |

| Show Thumbnail of Image | ||

| Thumbnail Image Display |

| Show Thumbnail of Image |